Merge pull request #2664 from microsoft/v1.7

V1.7 merge back to master

24.7 KB

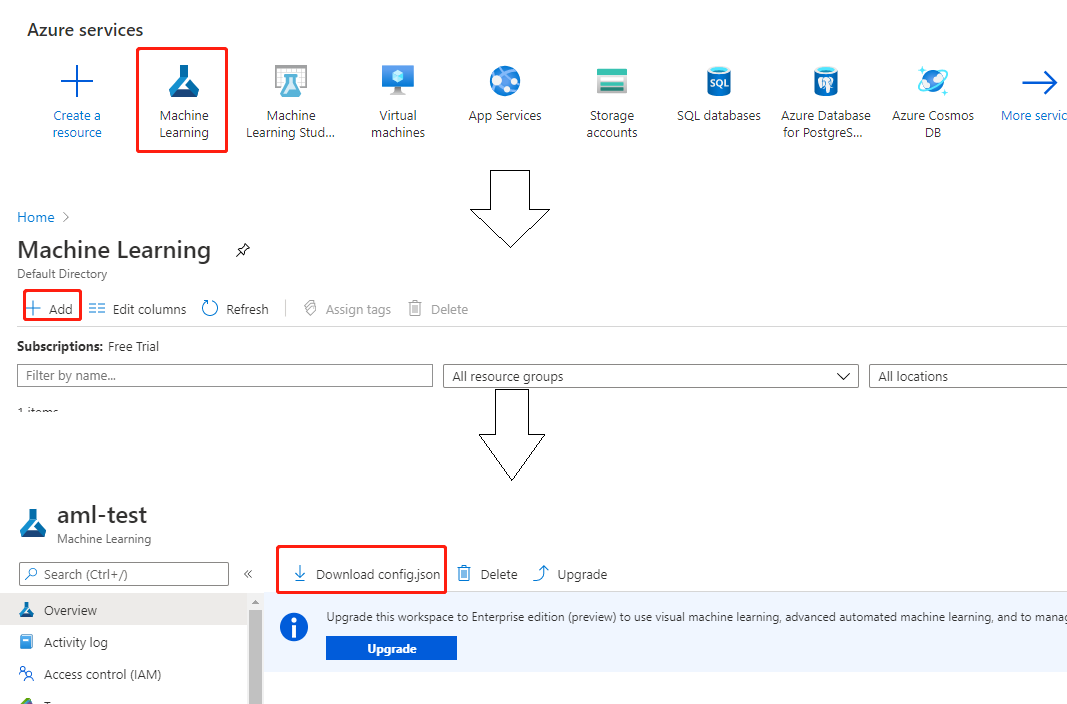

docs/img/aml_cluster.png

0 → 100644

15.1 KB

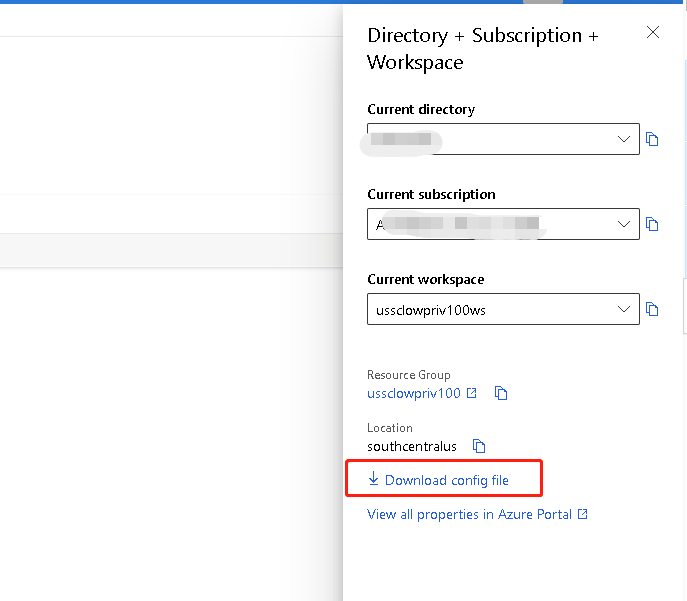

docs/img/aml_workspace.png

0 → 100644

43.4 KB

32 KB

31.6 KB

26.8 KB

examples/nas/benchmarks/nasbench101.sh

100644 → 100755