Merge branch 'master' into v2.0-merge

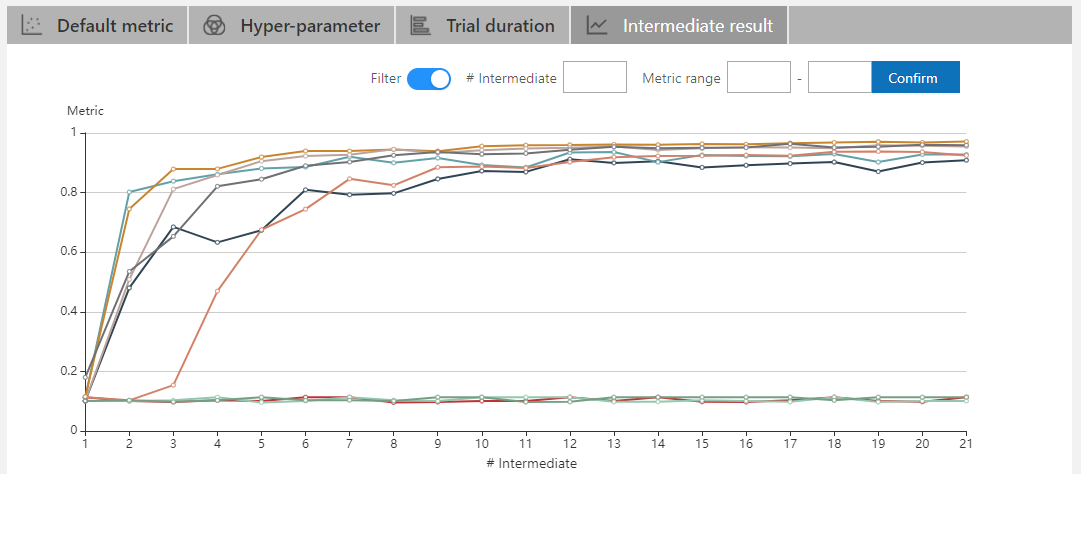

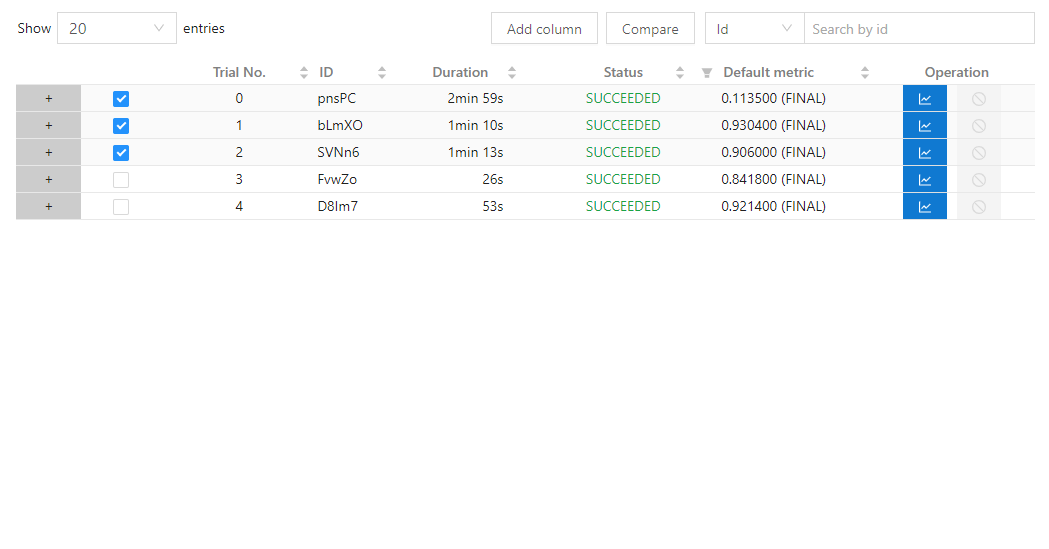

24.4 KB

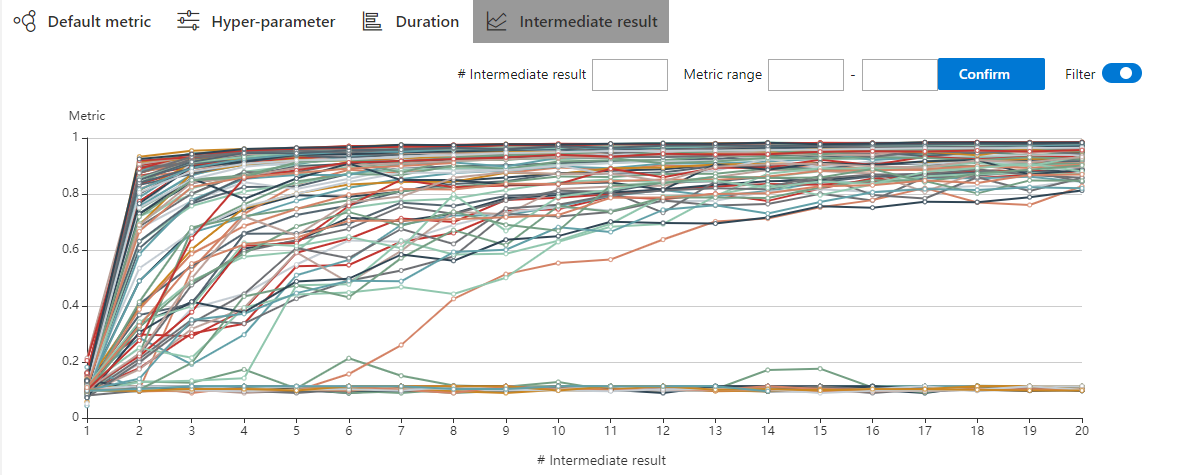

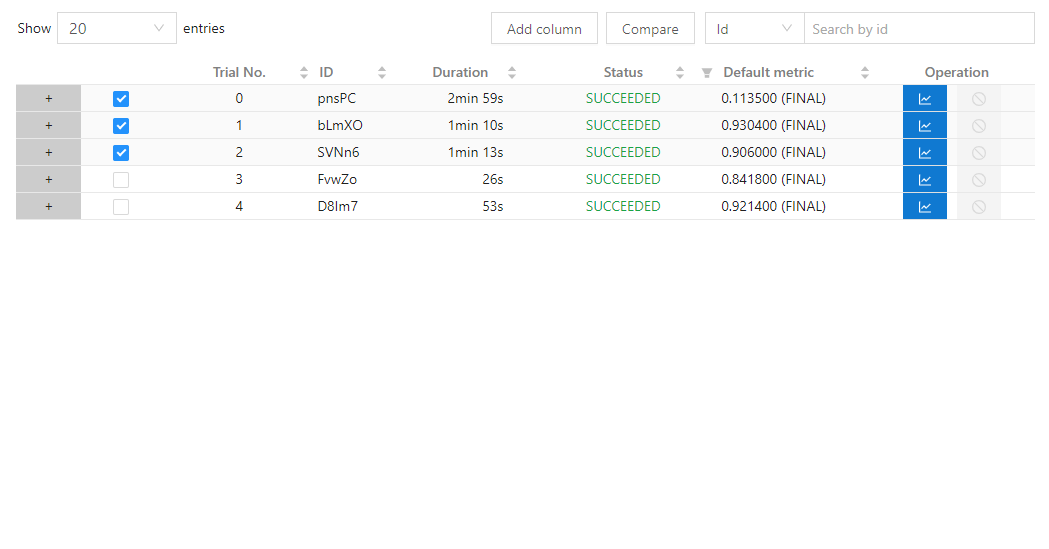

22.1 KB

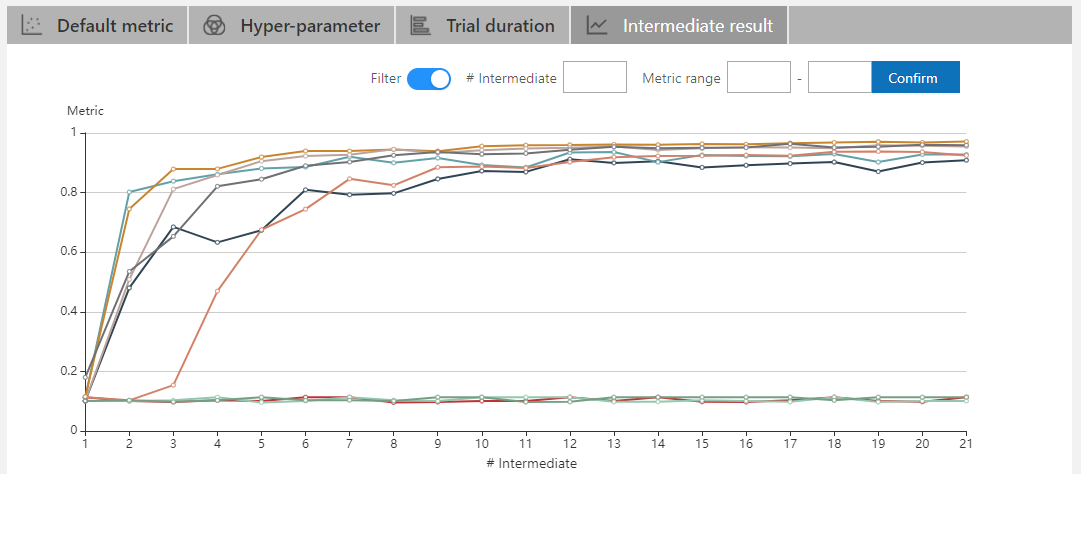

22 KB

24.4 KB

22.1 KB

21.6 KB

18 KB

22.3 KB

22.7 KB

22 KB

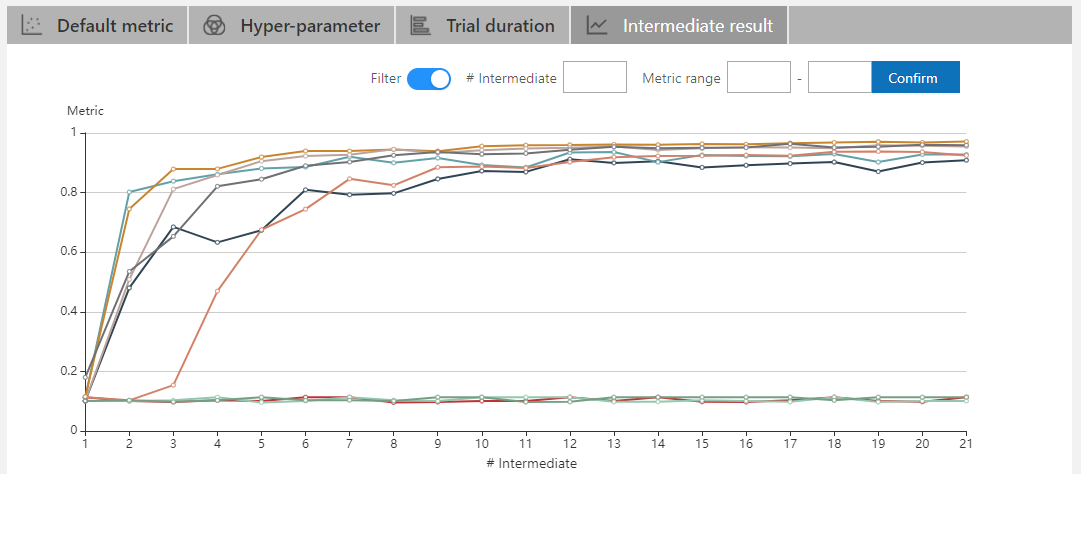

67.1 KB

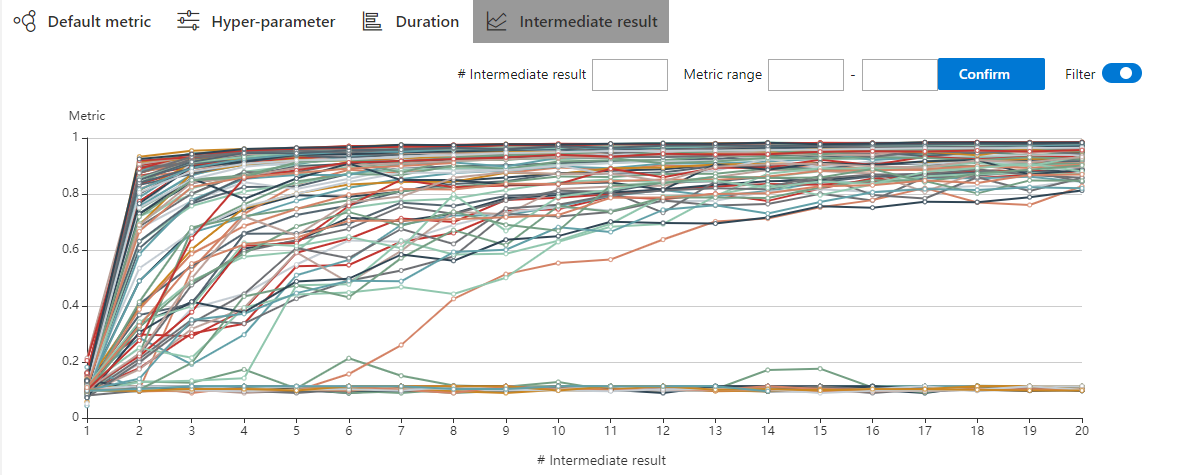

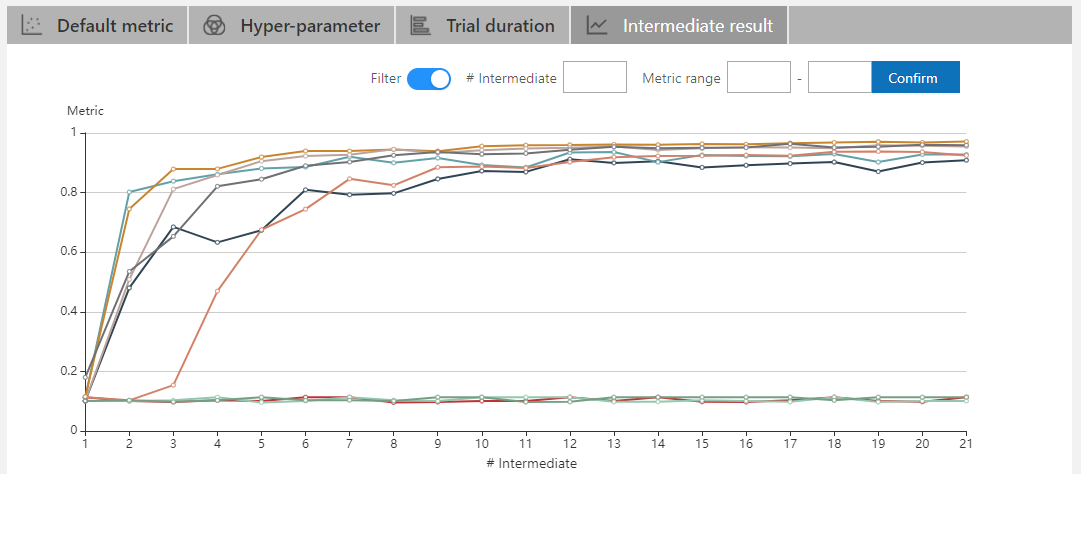

243 KB