DLTS integration (#1945)

* skeleton of dlts training service (#1844)

* Hello, DLTS!

* Revert version

* Remove fs-extra

* Add some default cluster config

* schema

* fix

* Optional cluster (default to `.default`)

Depends on DLWorkspace#837

* fix

* fix

* optimize gpu type

* No more copy

* Format

* Code clean up

* Issue fix

* Add optional fields in config

* Issue fix

* Lint

* Lint

* Validate email, password and team

* Doc

* Doc fix

* Set TMPDIR

* Use metadata instead of gpu_capacity

* Cancel paused DLTS job

* workaround lint rules

* pylint

* doc

Co-authored-by: QuanluZhang <z.quanluzhang@gmail.com>



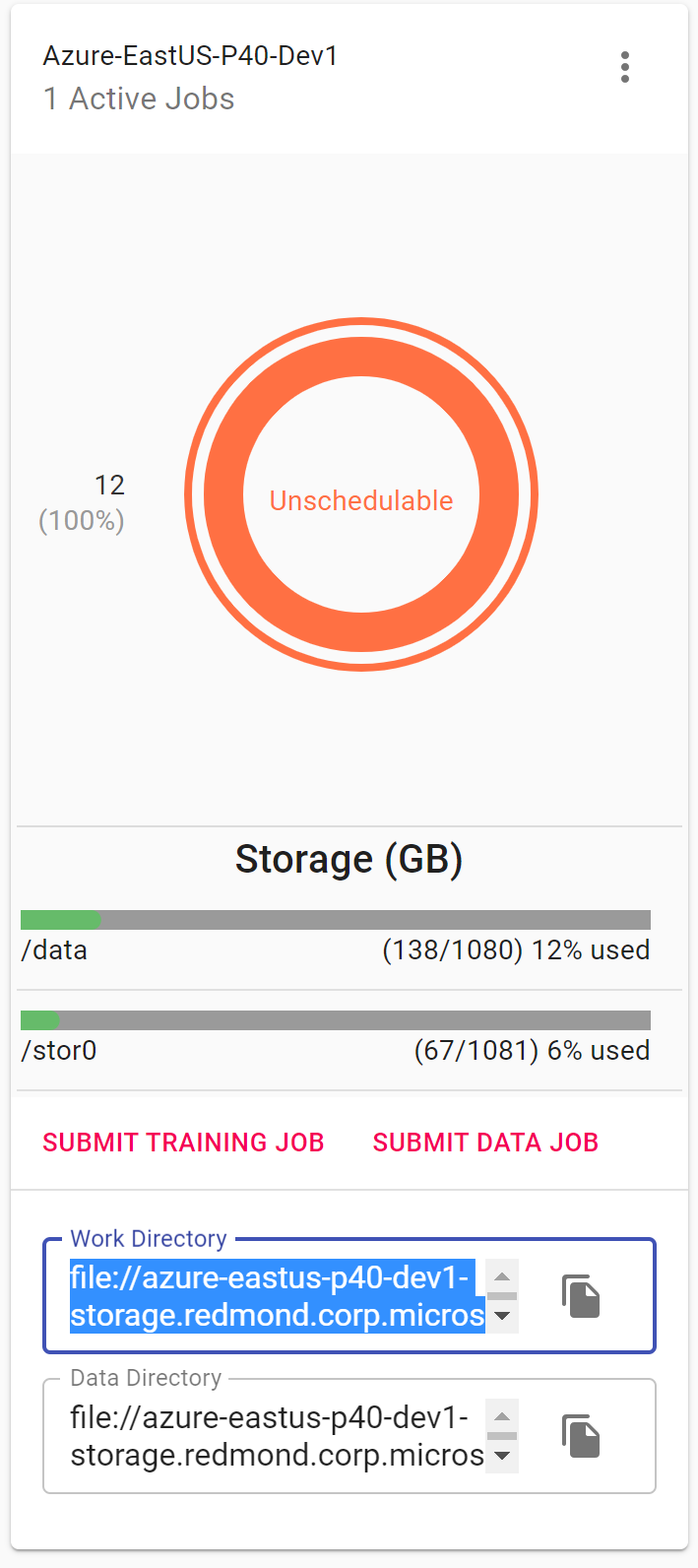

docs/img/dlts-step1.png

0 → 100644

83.7 KB

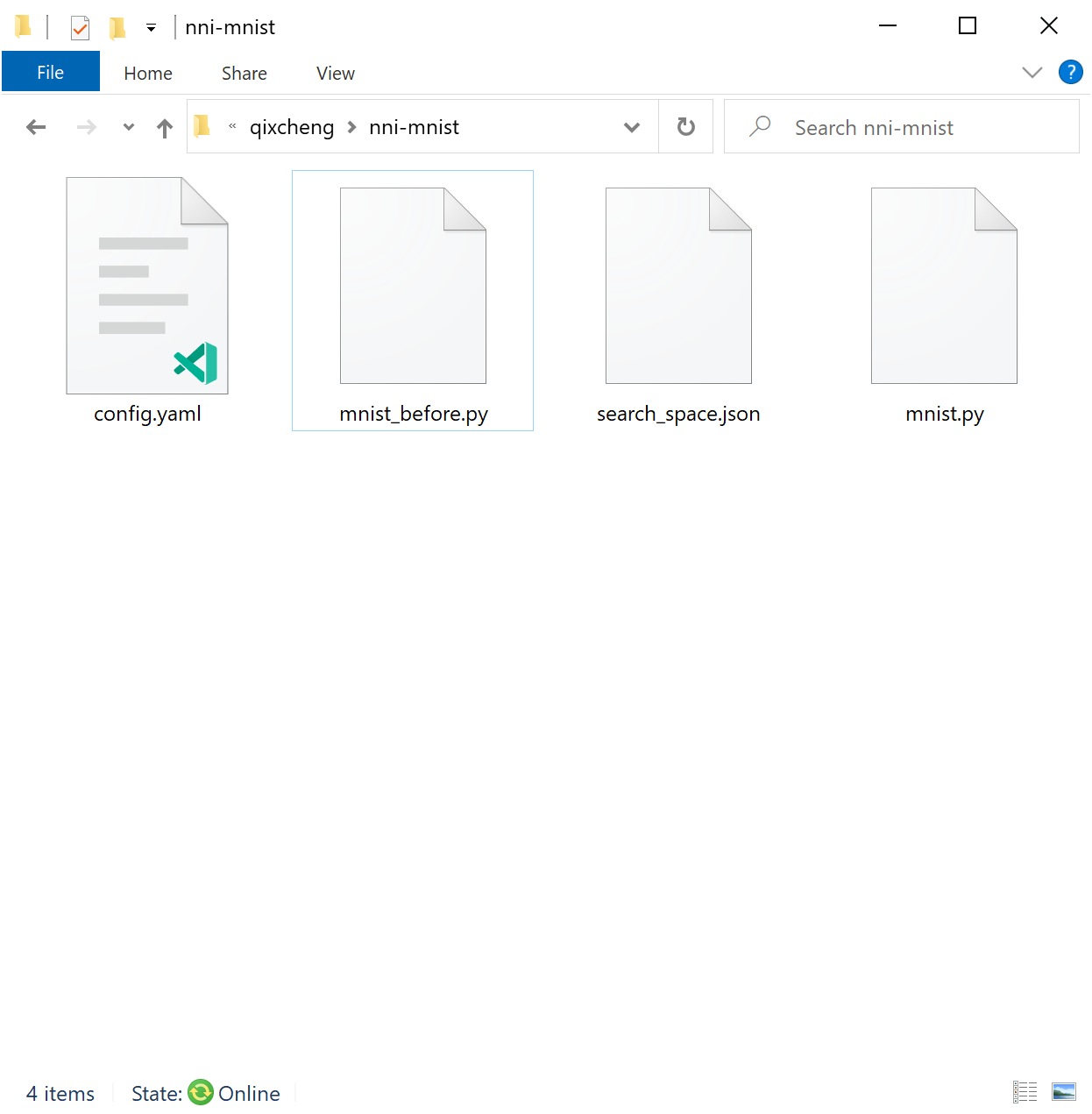

docs/img/dlts-step3.png

0 → 100644

56 KB

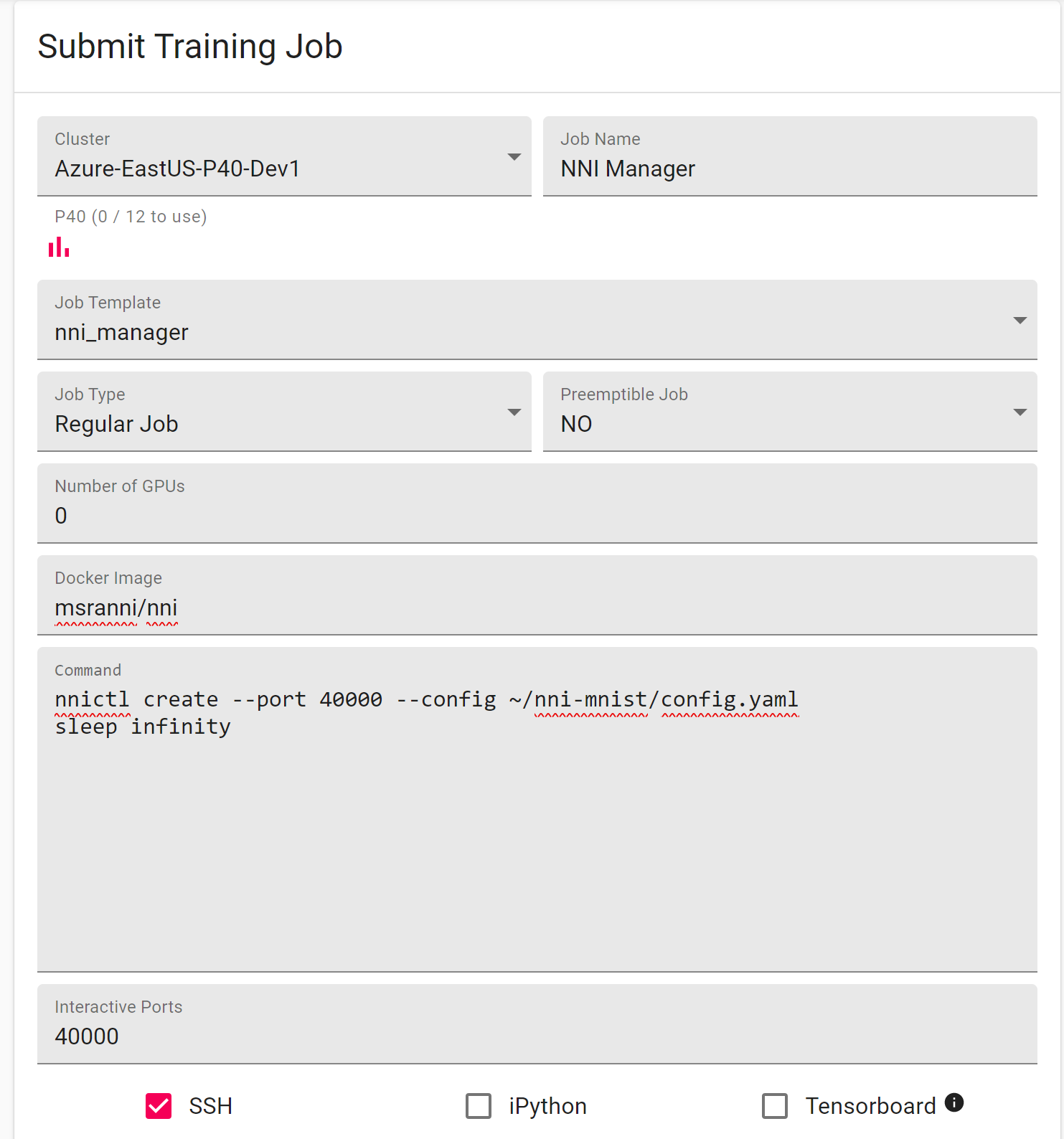

docs/img/dlts-step4.png

0 → 100644

73.9 KB

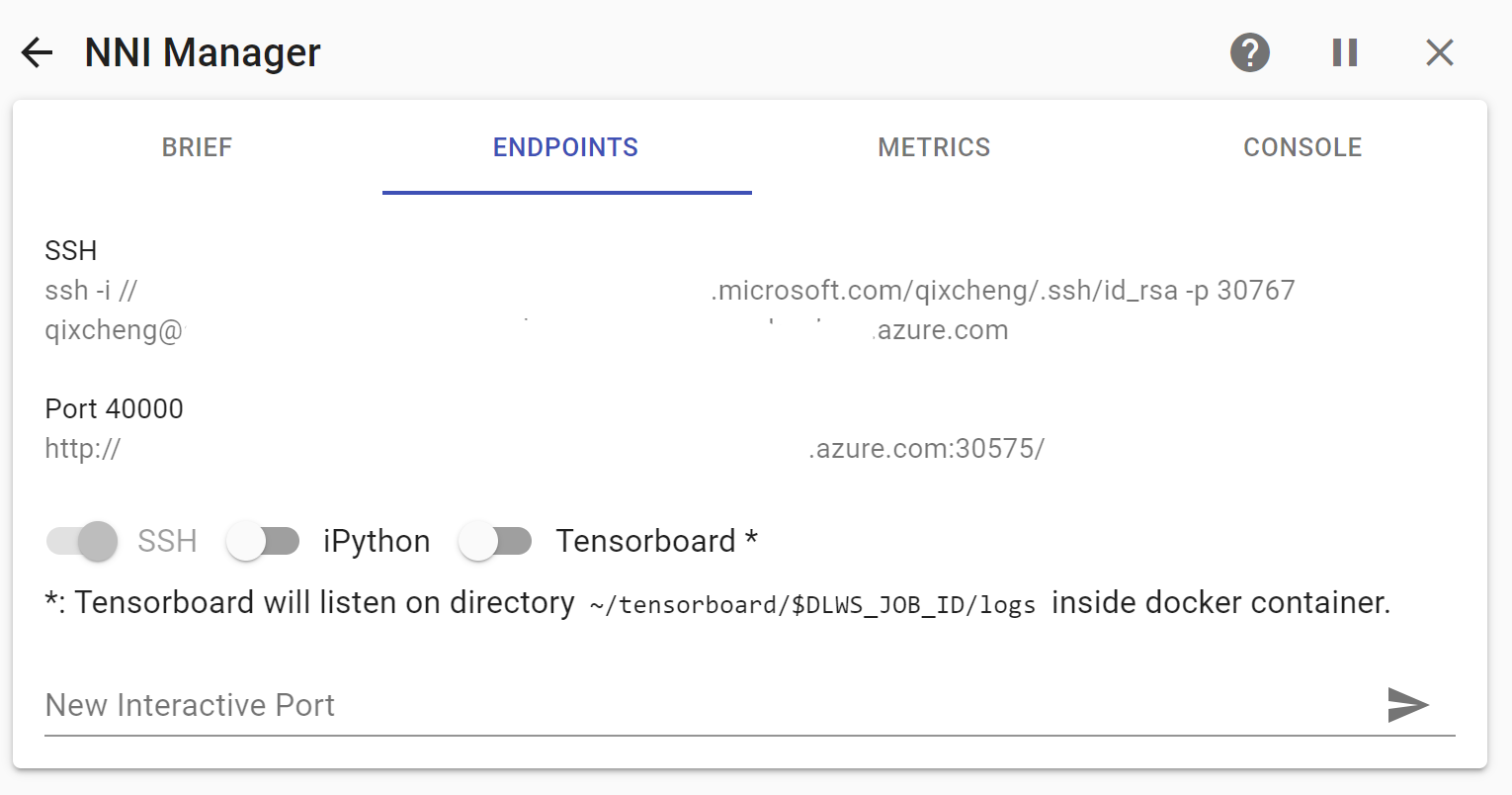

docs/img/dlts-step5.png

0 → 100644

77.5 KB
