Merge pull request #4291 from microsoft/v2.5

merge v2.5 back to master

252 KB

docs/img/emoicons/Crying.png

0 → 100644

275 KB

docs/img/emoicons/Cut.png

0 → 100644

468 KB

docs/img/emoicons/Error.png

0 → 100644

200 KB

604 KB

docs/img/emoicons/NoBug.png

0 → 100644

214 KB

docs/img/emoicons/Sign.png

0 → 100644

244 KB

docs/img/emoicons/Sweat.png

0 → 100644

239 KB

421 KB

285 KB

docs/img/emoicons/home.svg

0 → 100644

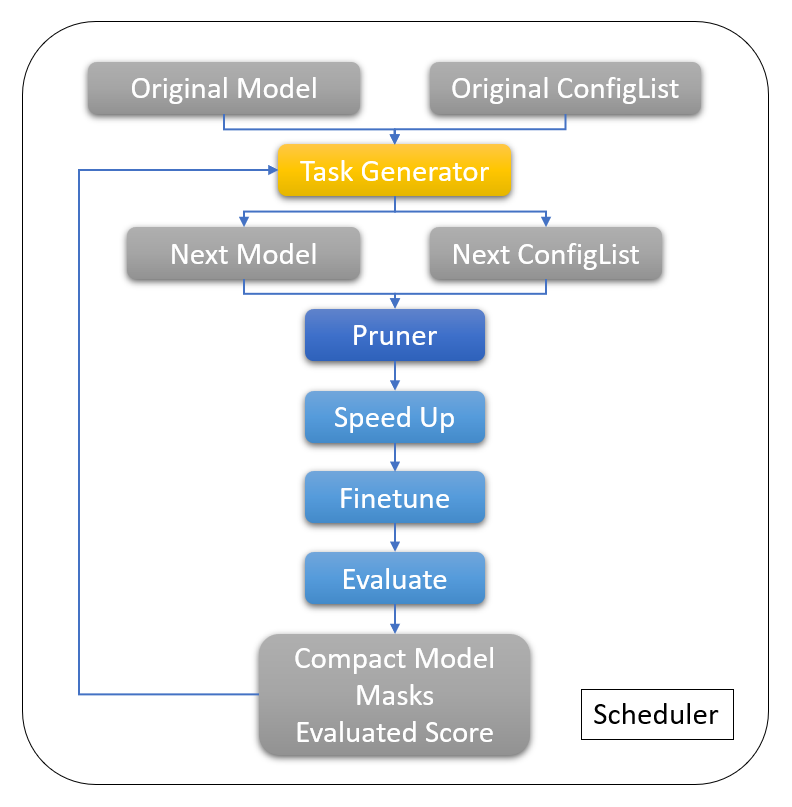

docs/img/pruning_process.png

0 → 100644

55.9 KB