Merge pull request #3580 from microsoft/v2.2

[do not Squash!] Merge V2.2 back to master

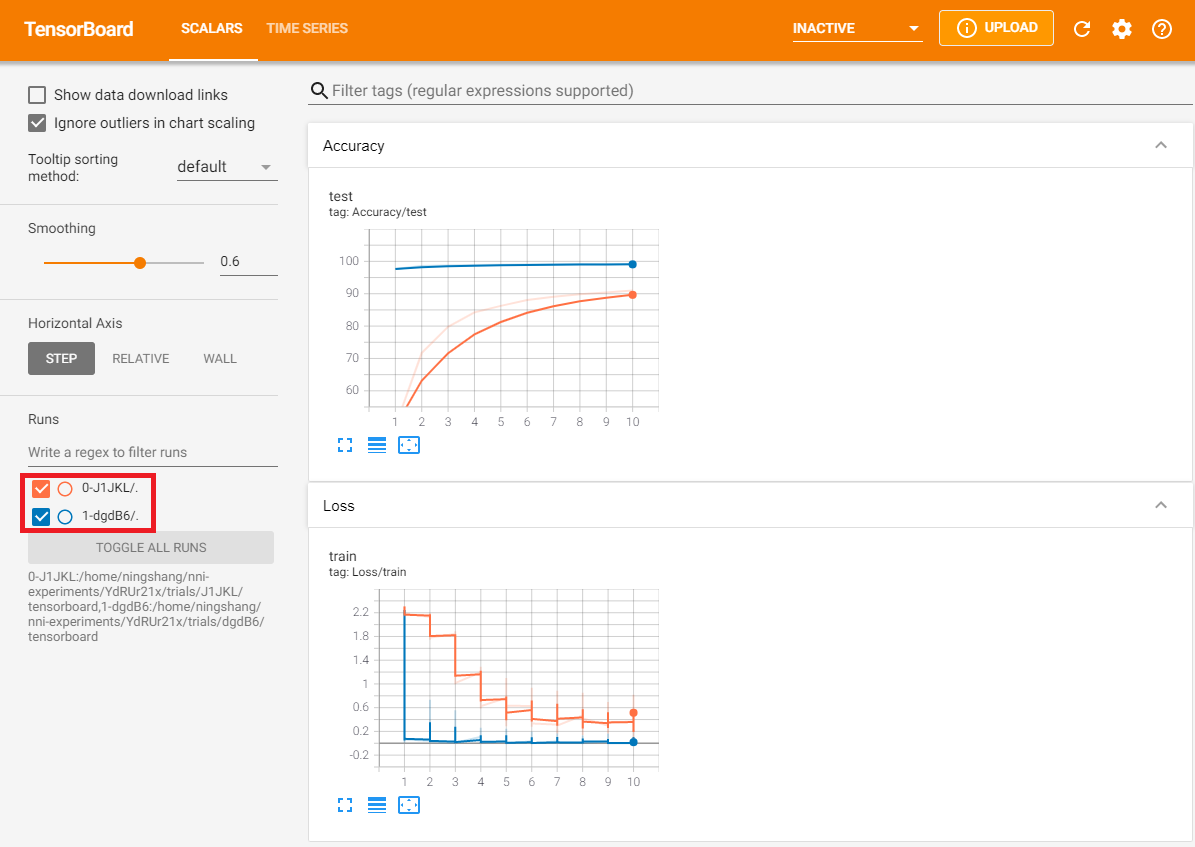

docs/img/Tensorboard_3.png

0 → 100644

93.2 KB

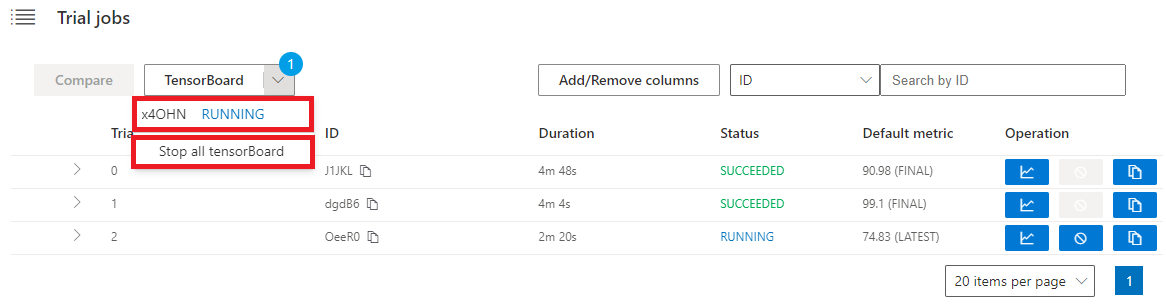

docs/img/Tensorboard_4.png

0 → 100644

30.8 KB
