Merge pull request #57 from UnicornChan/develop-0.1.3

[feature] release 0.1.3

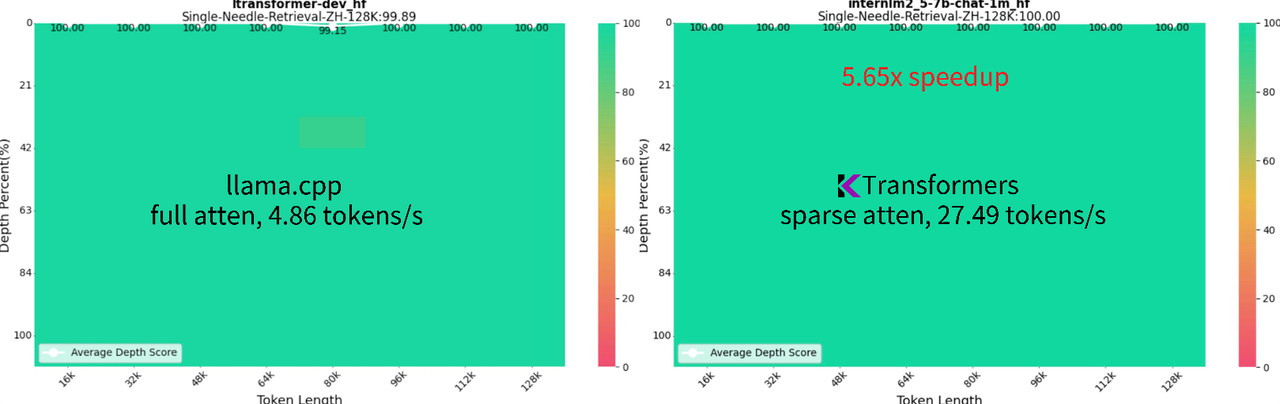

doc/assets/needle_128K.png

0 → 100644

135 KB

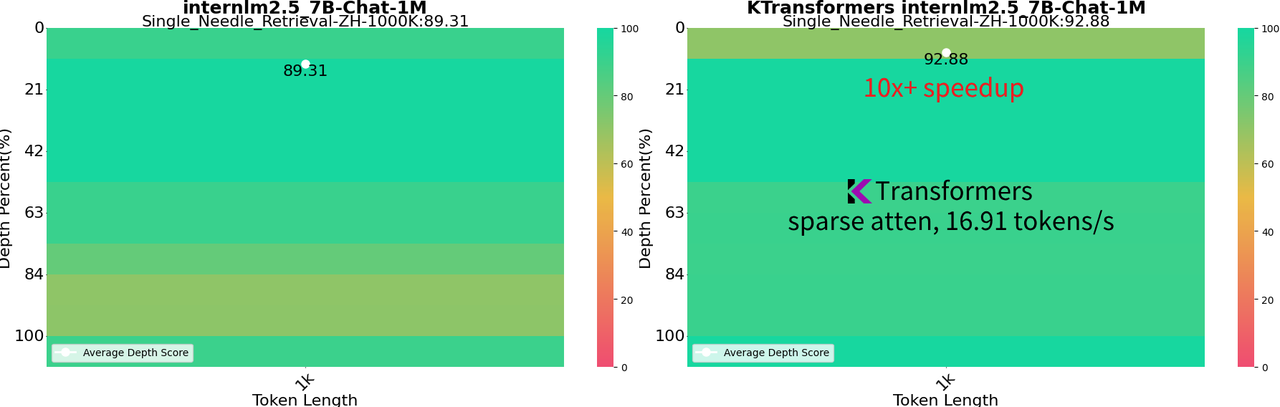

doc/assets/needle_1M.png

0 → 100644

101 KB
