[feat] layer memory tracking (#808)

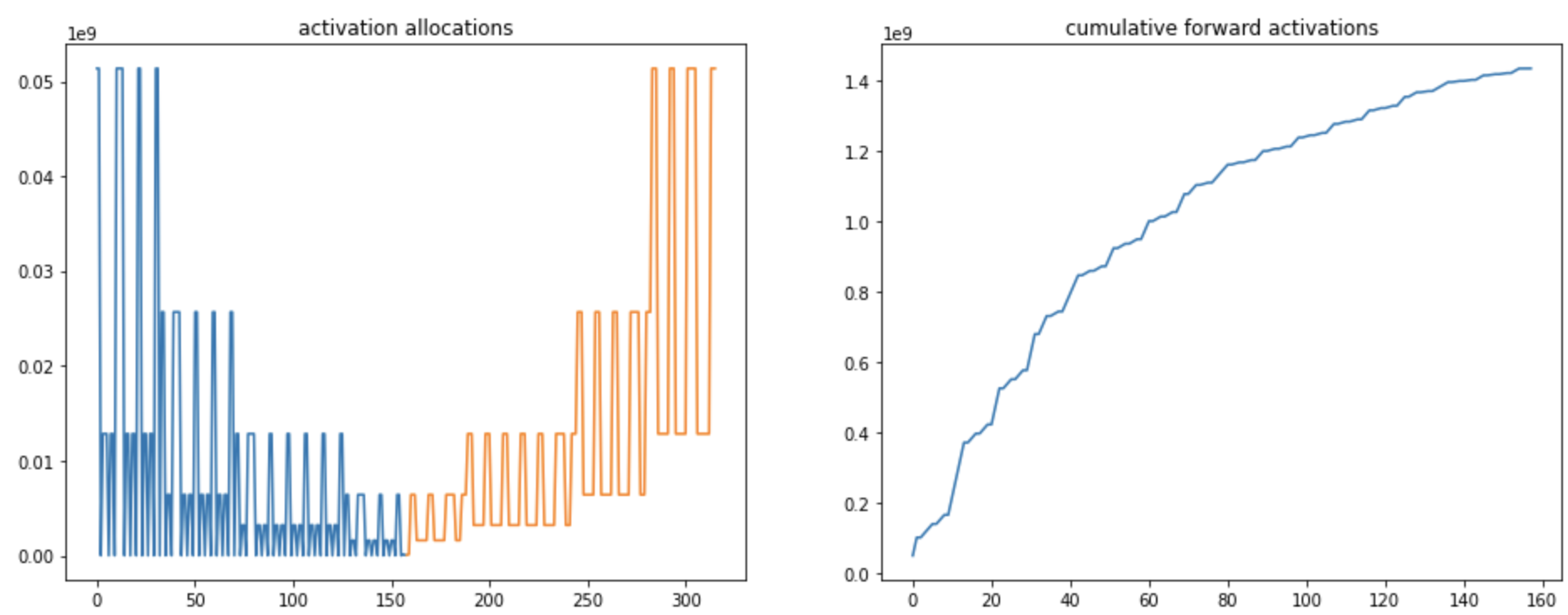

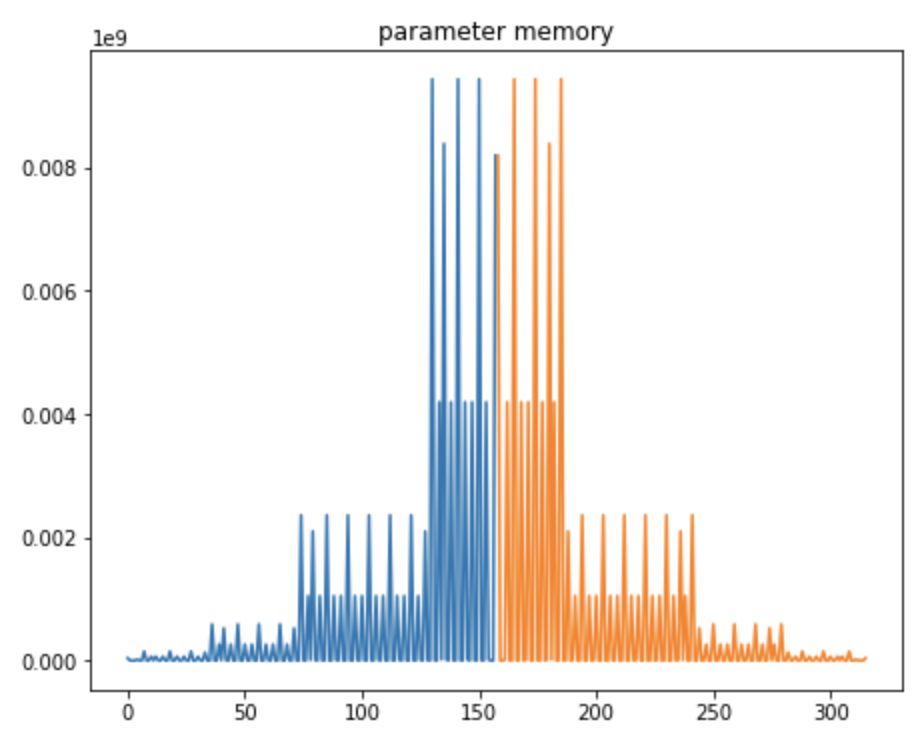

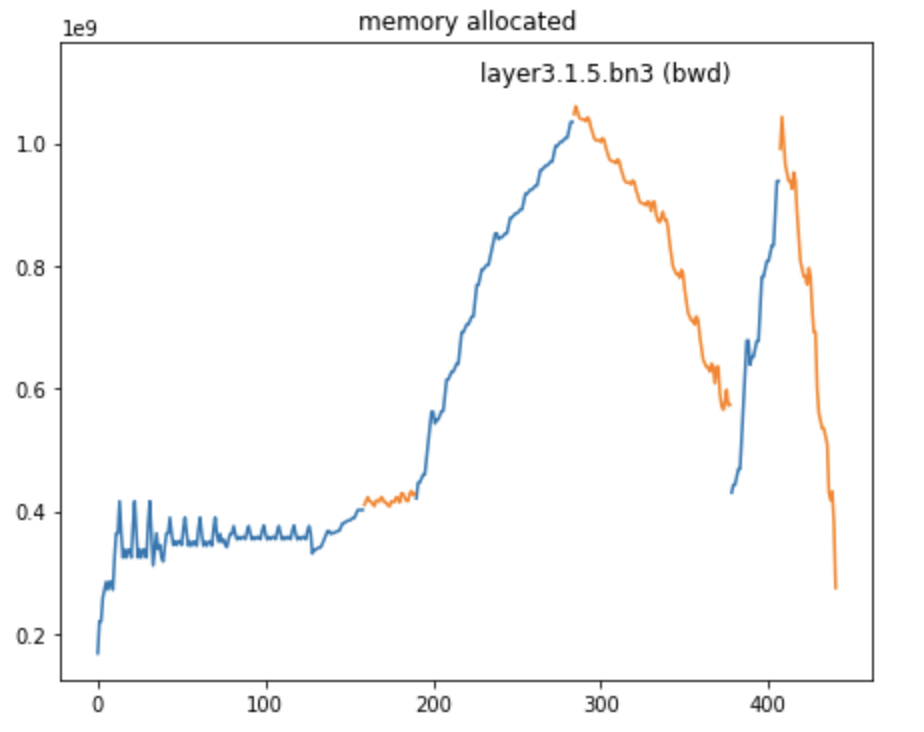

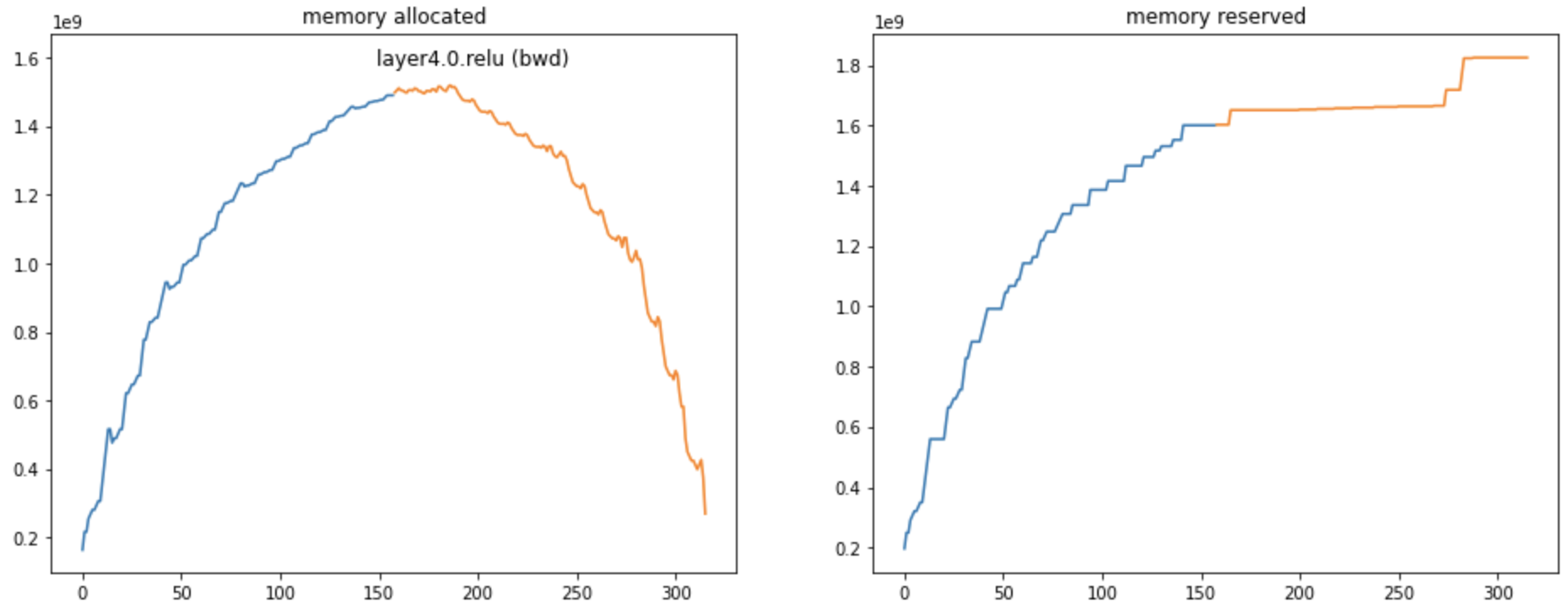

* [feat] layer memory tracking

* [feat] layer memory tracking (add tests in CI)

* [feat] layer memory tracking: doc typos

* [feat] layer memory tracking: mypy fixes

* [feat] layer memory tracking: fixes for FSDP all-gather tracking on PyTorch 1.9 and above

* [feat] layer memory tracking: lint

* [feat] layer memory tracking: mypy

Co-authored-by: QuentinDuval <QuentinDuval@users.noreply.github.com>