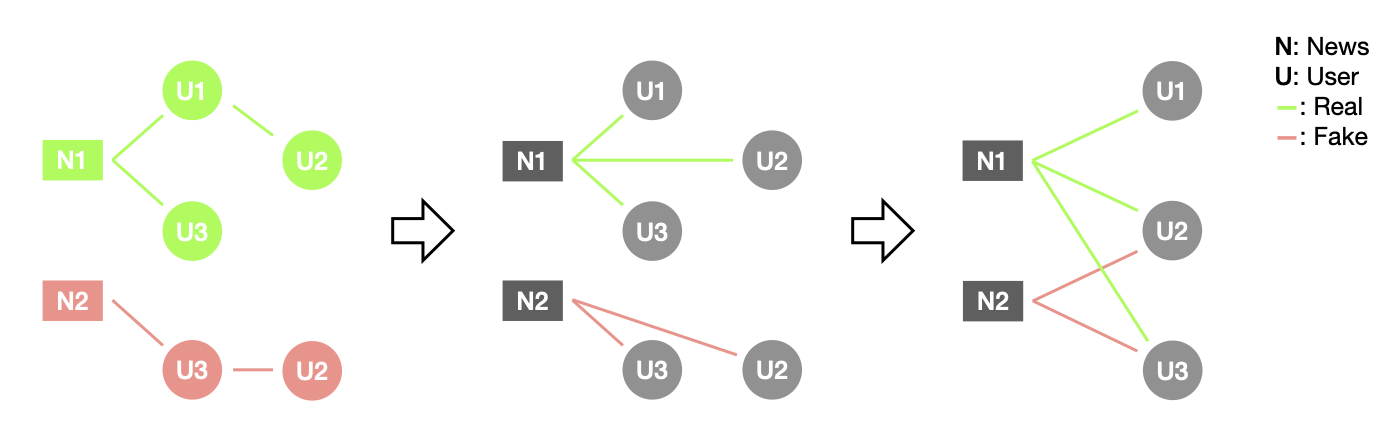

[Model] add model example GCN-based Anti-Spam (#3145)

* add model example GCN-based Anti-Spam

* update example index

* add usage info

* improvements as per comments

* fix image invisible problem

* add image file

Co-authored-by: zhjwy9343 <6593865@qq.com>

examples/pytorch/gas/main.py

0 → 100644

70.1 KB