push v0.1.3 version commit bd2ea47

To preserve performance only 424 of 424+ files are displayed.

89.3 KB

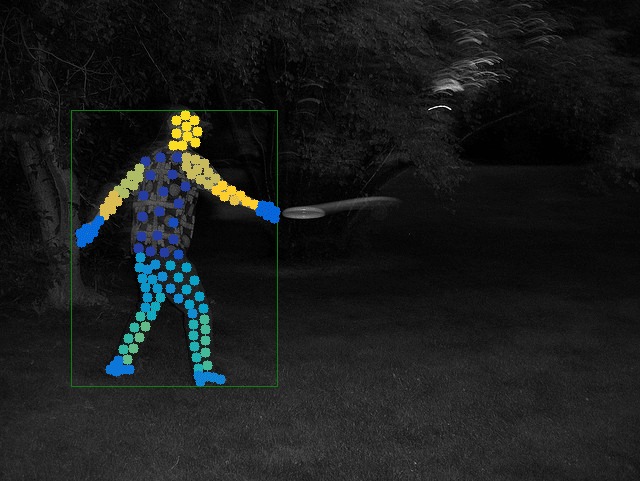

153 KB

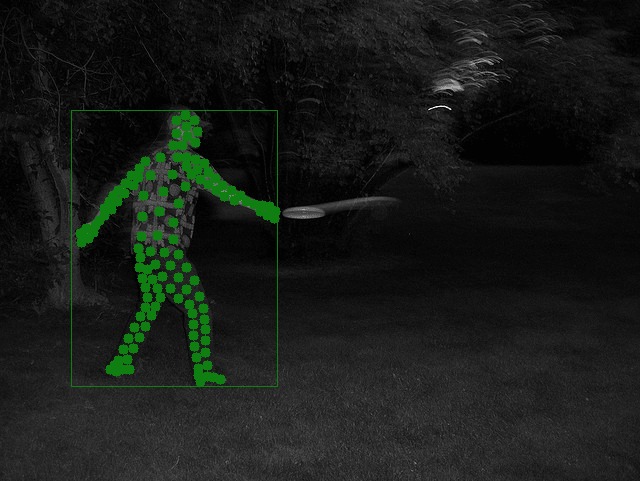

155 KB

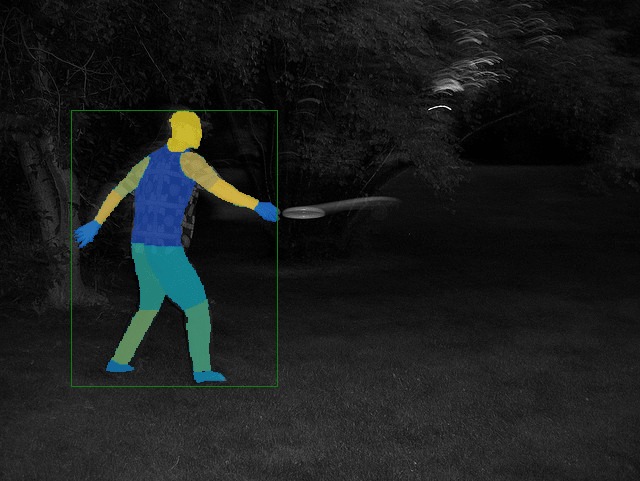

154 KB

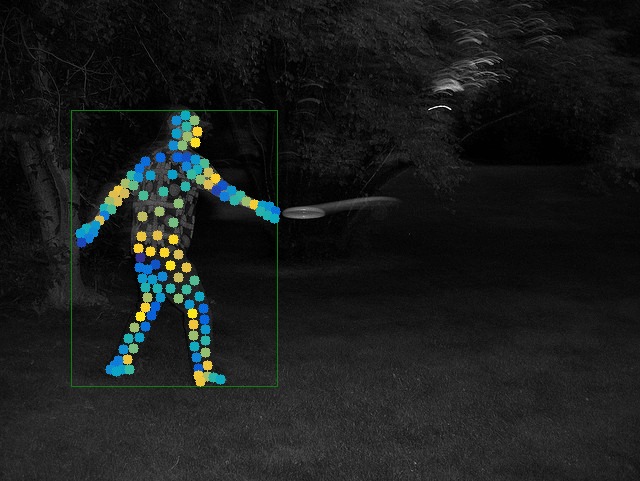

84.2 KB

81.4 KB

77.7 KB

85 KB

85 KB

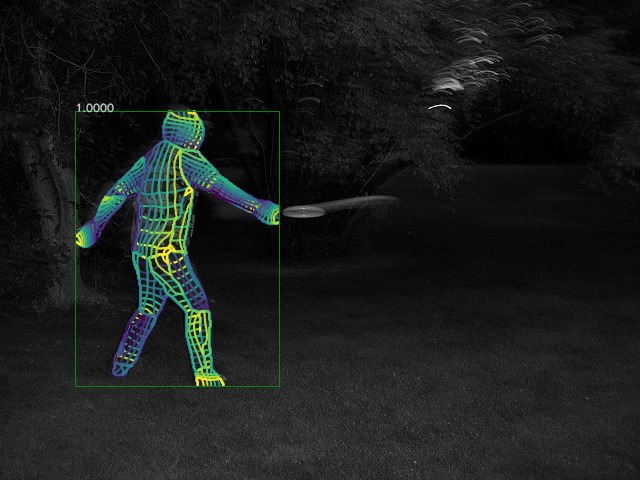

89.3 KB

153 KB

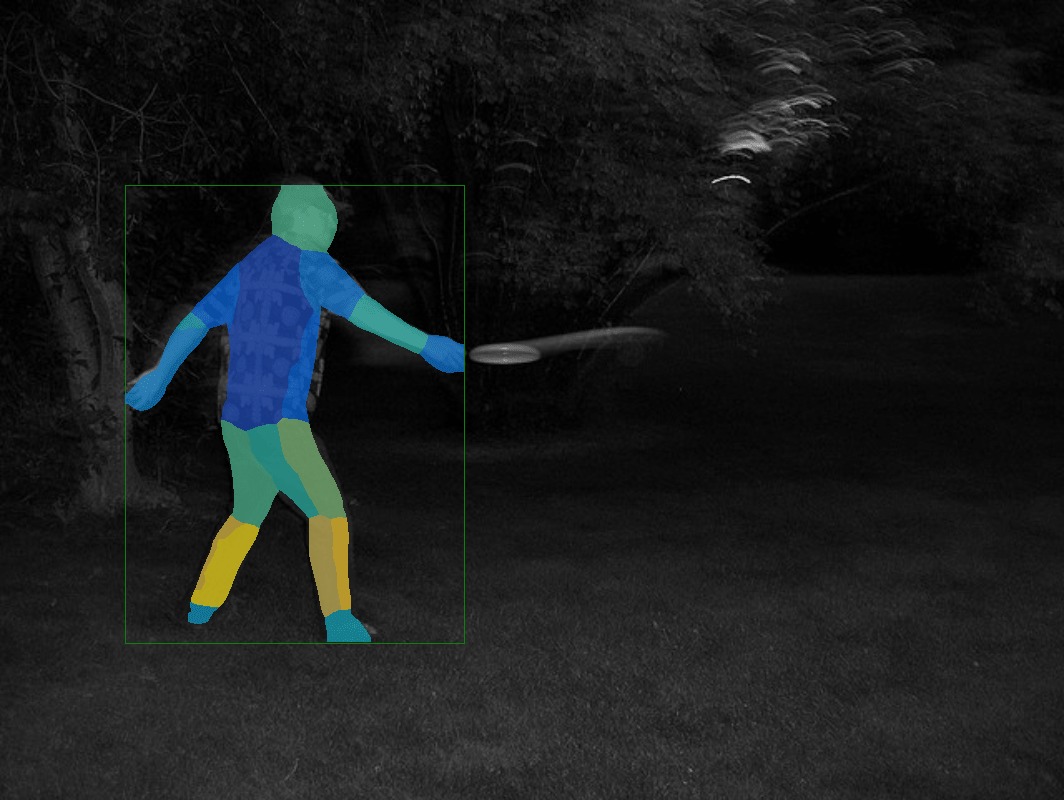

155 KB

154 KB

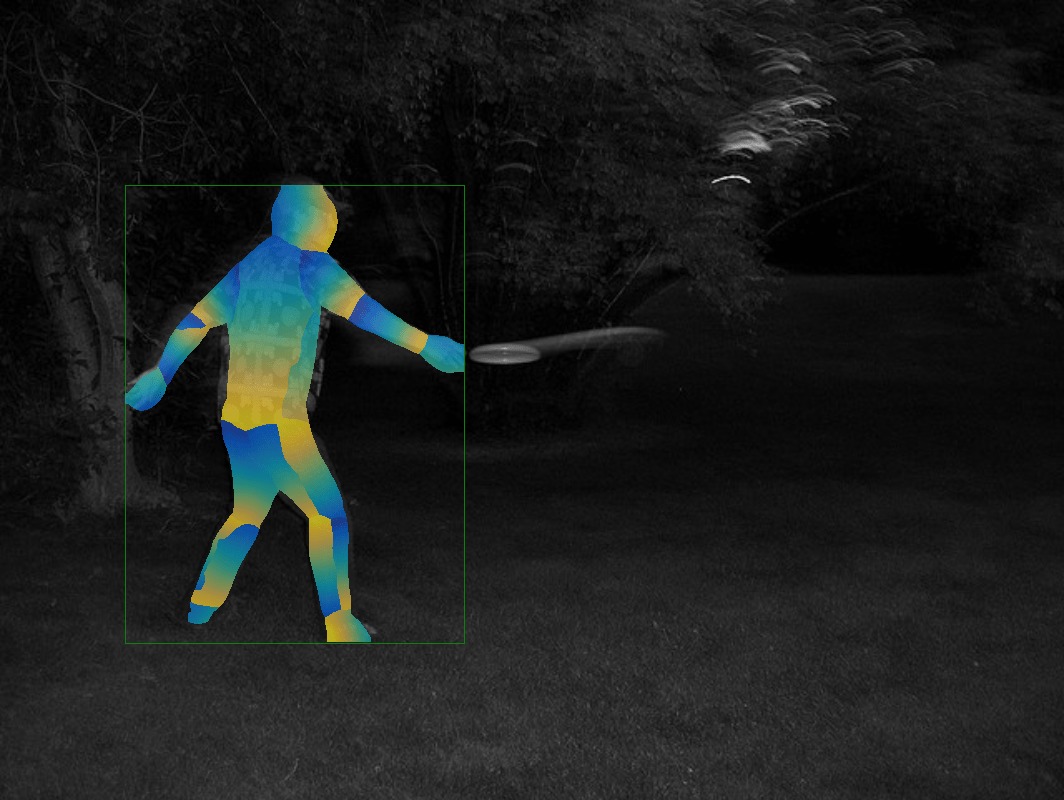

84.2 KB

81.4 KB

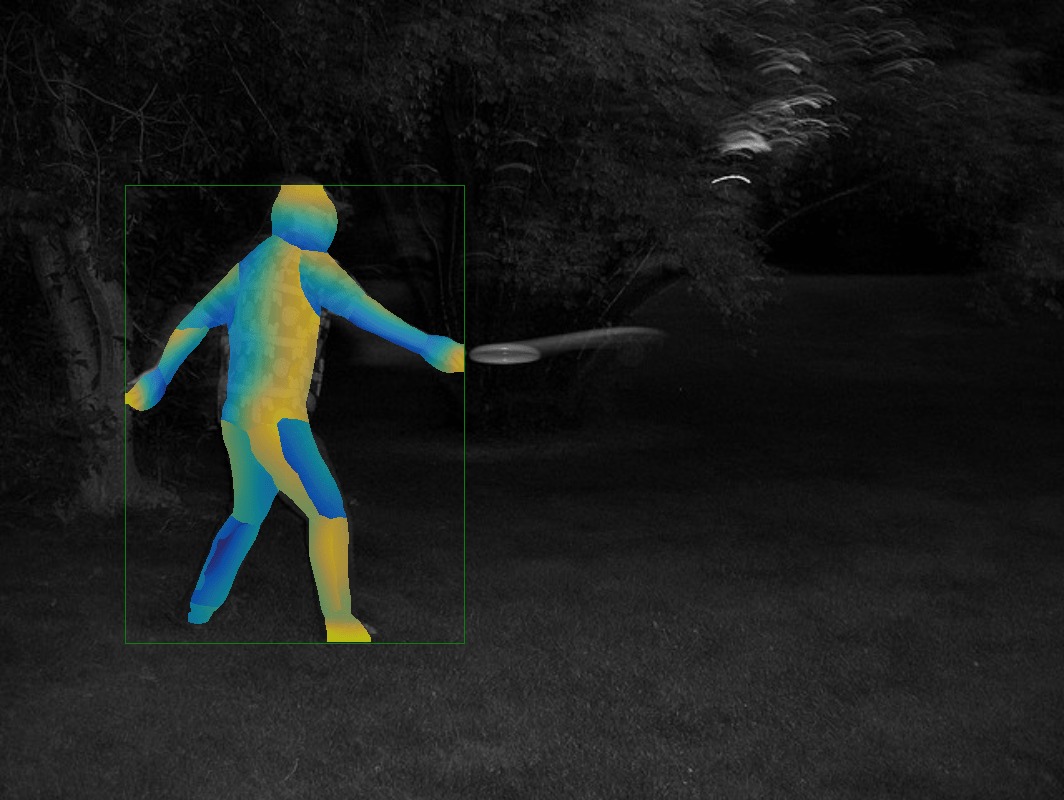

77.7 KB

85 KB

85 KB