docs: remove the images to reduce the repo size (#329)

* replace the assets
* move some assets to huggingface
* update
* revert back
* see if the attachment works
* use the attachment link
* remove all the images

520 KB

603 KB

961 KB

436 KB

26.5 KB

619 KB

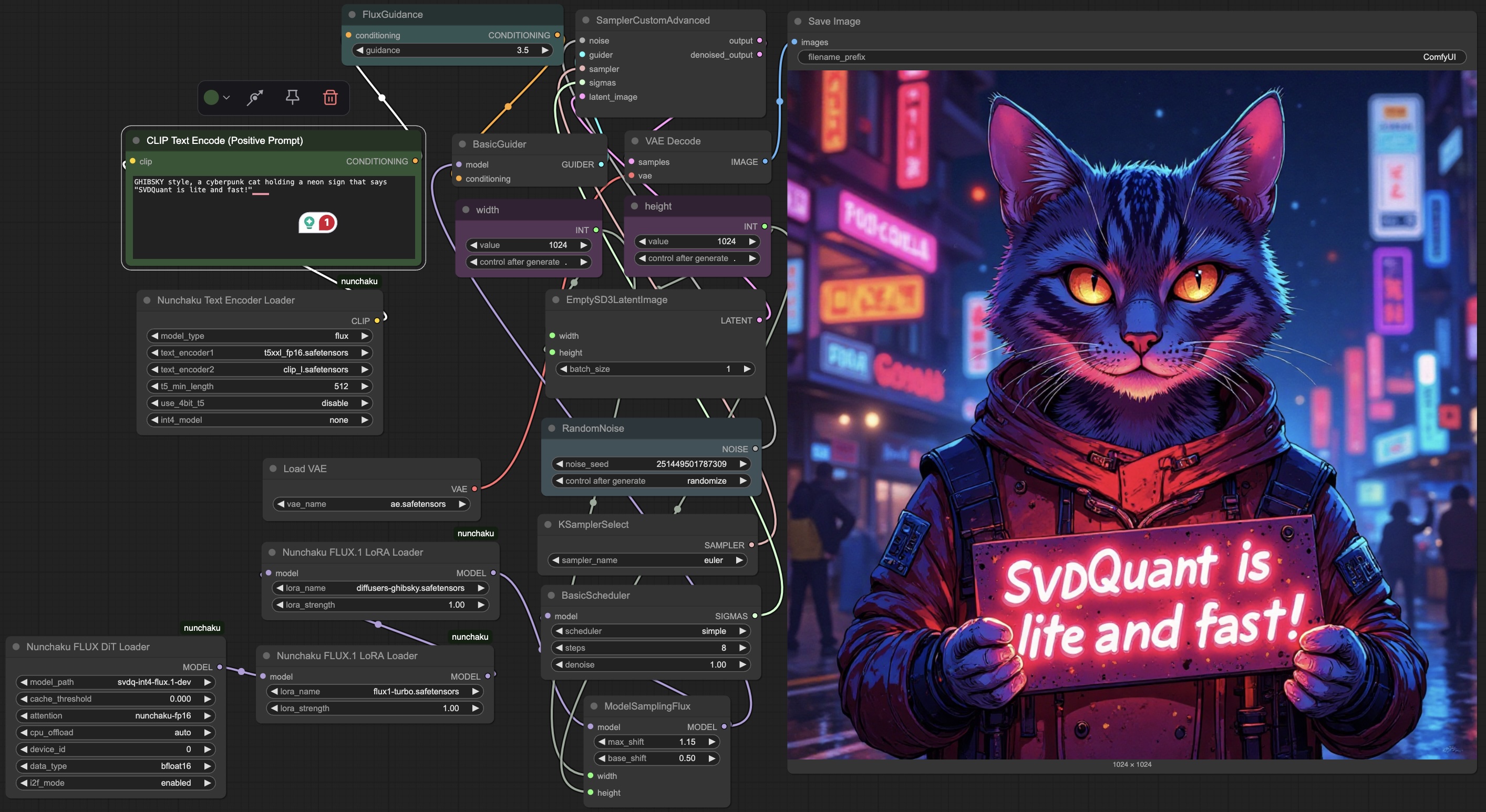

assets/comfyui.jpg

deleted

100644 → 0

968 KB

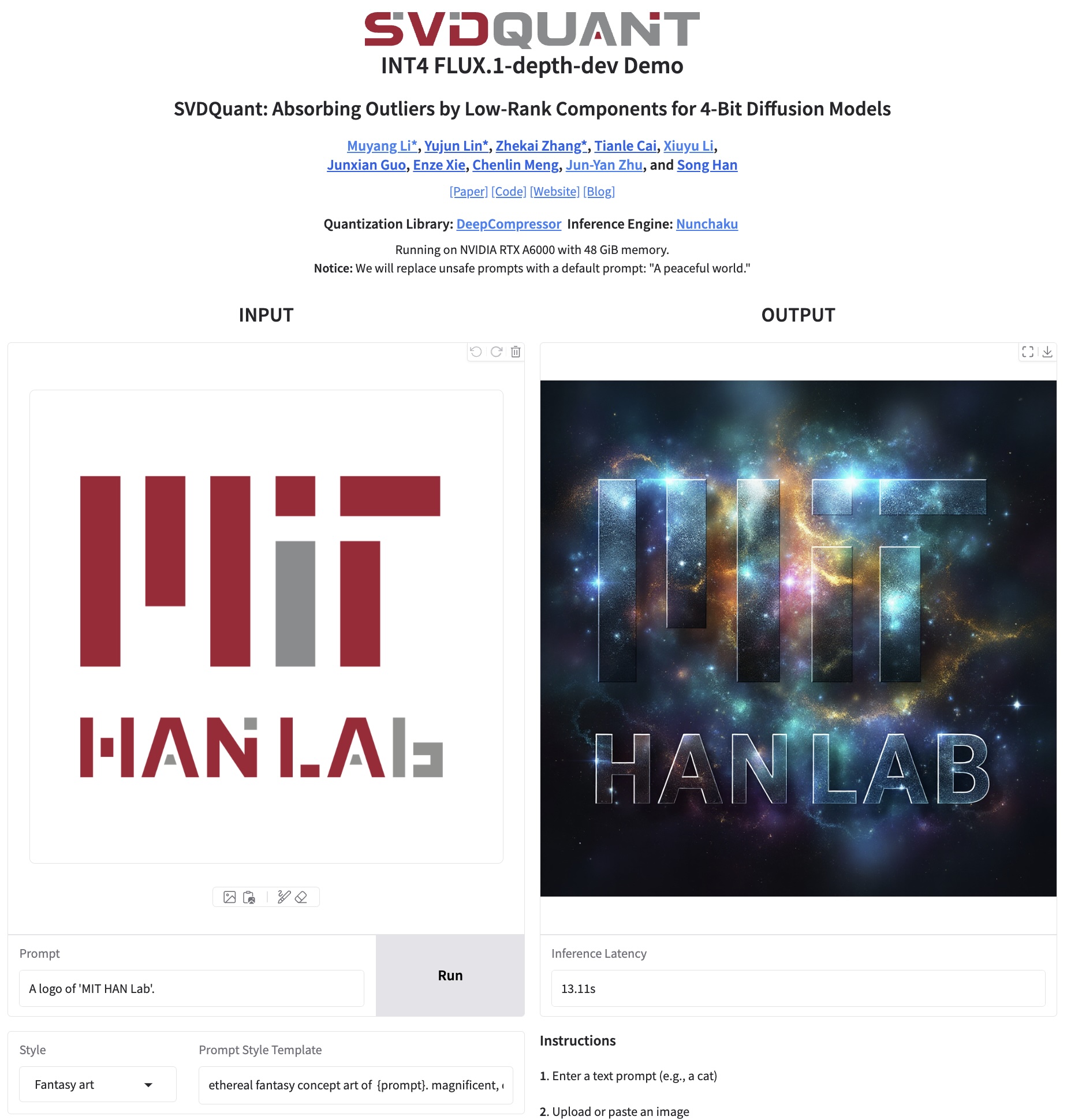

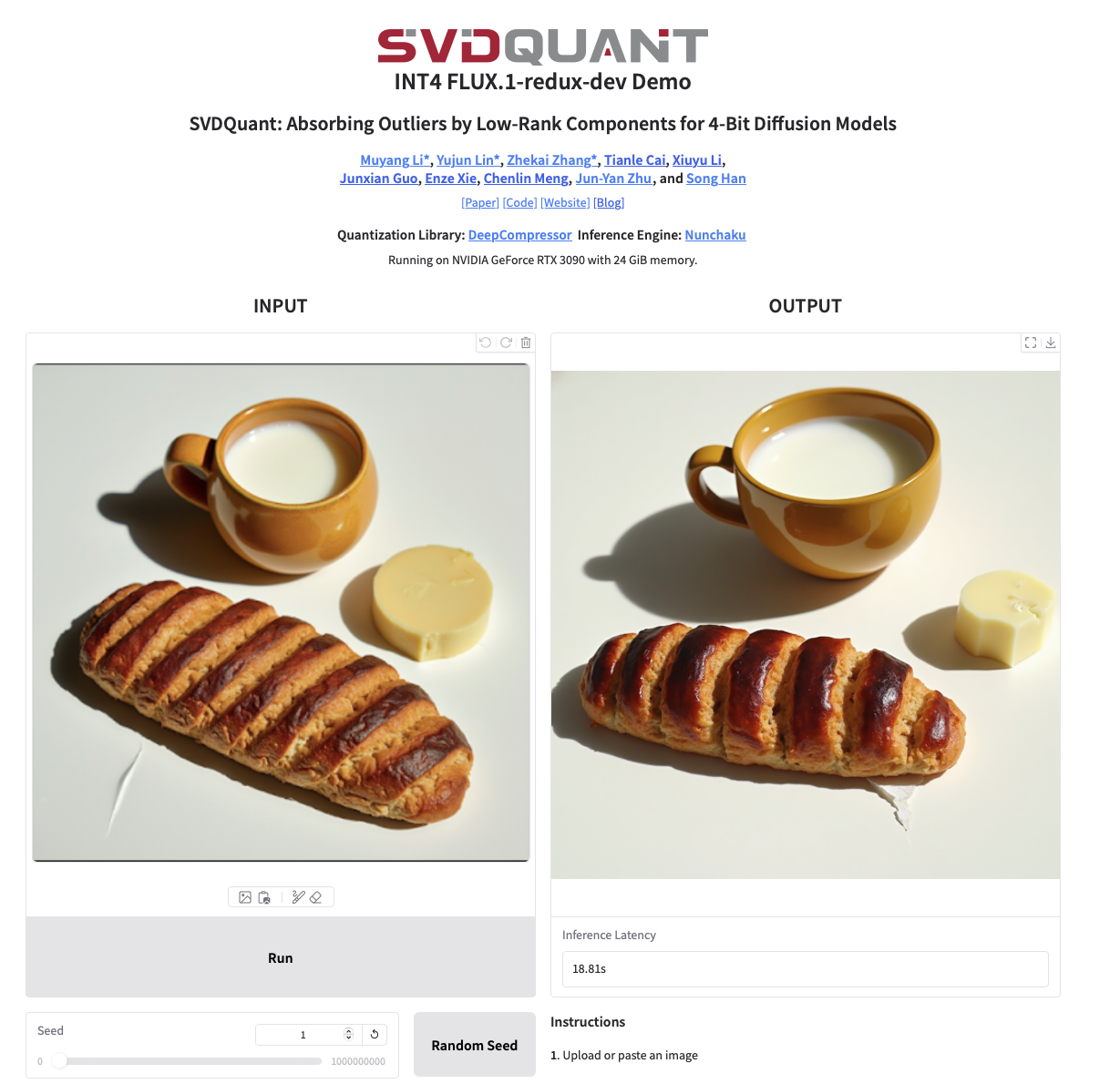

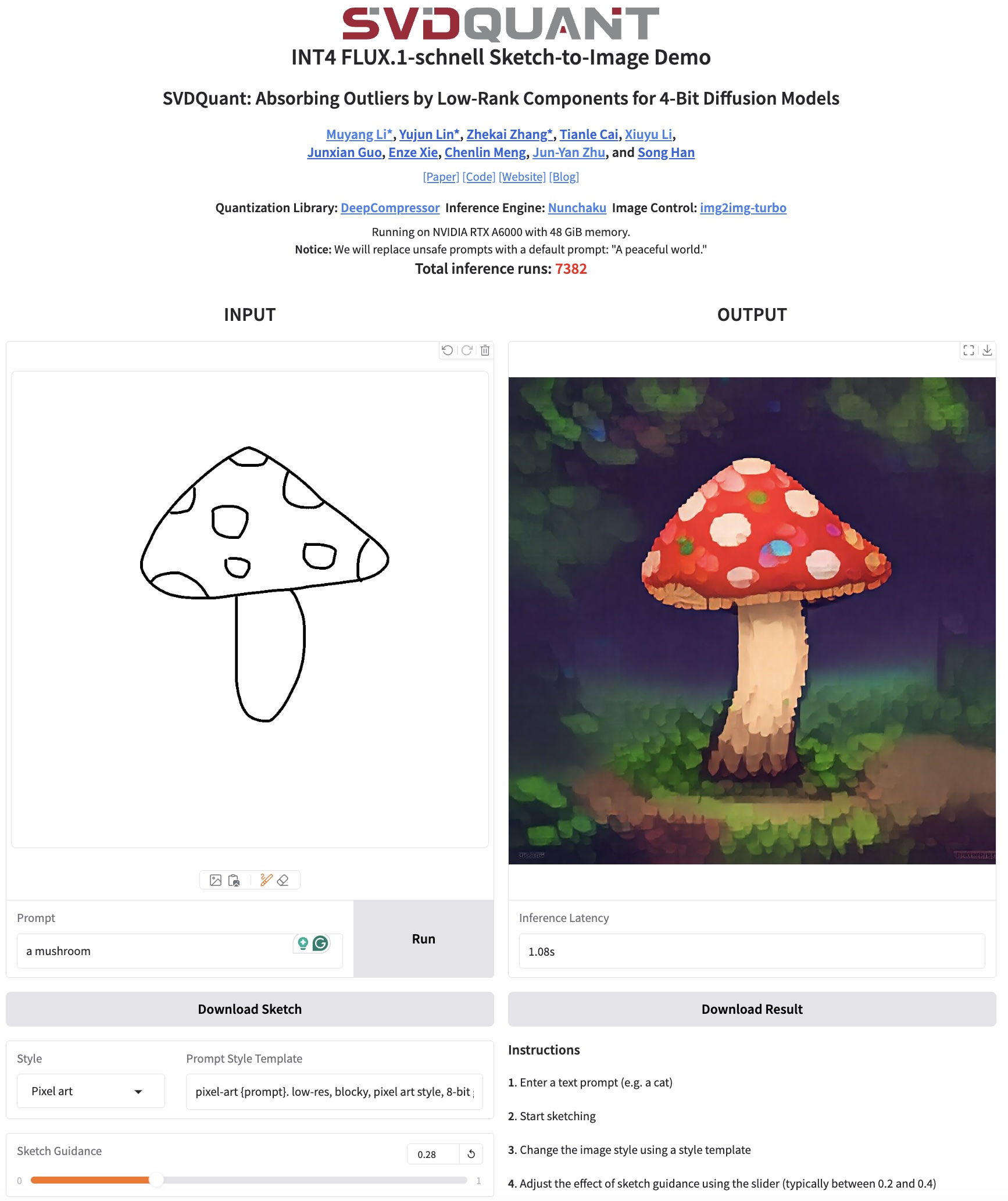

assets/control.jpg

deleted

100644 → 0

363 KB

assets/demo.gif

deleted

100644 → 0

275 KB

assets/engine.jpg

deleted

100644 → 0

128 KB

assets/intuition.gif

deleted

100644 → 0

287 KB