Initial release

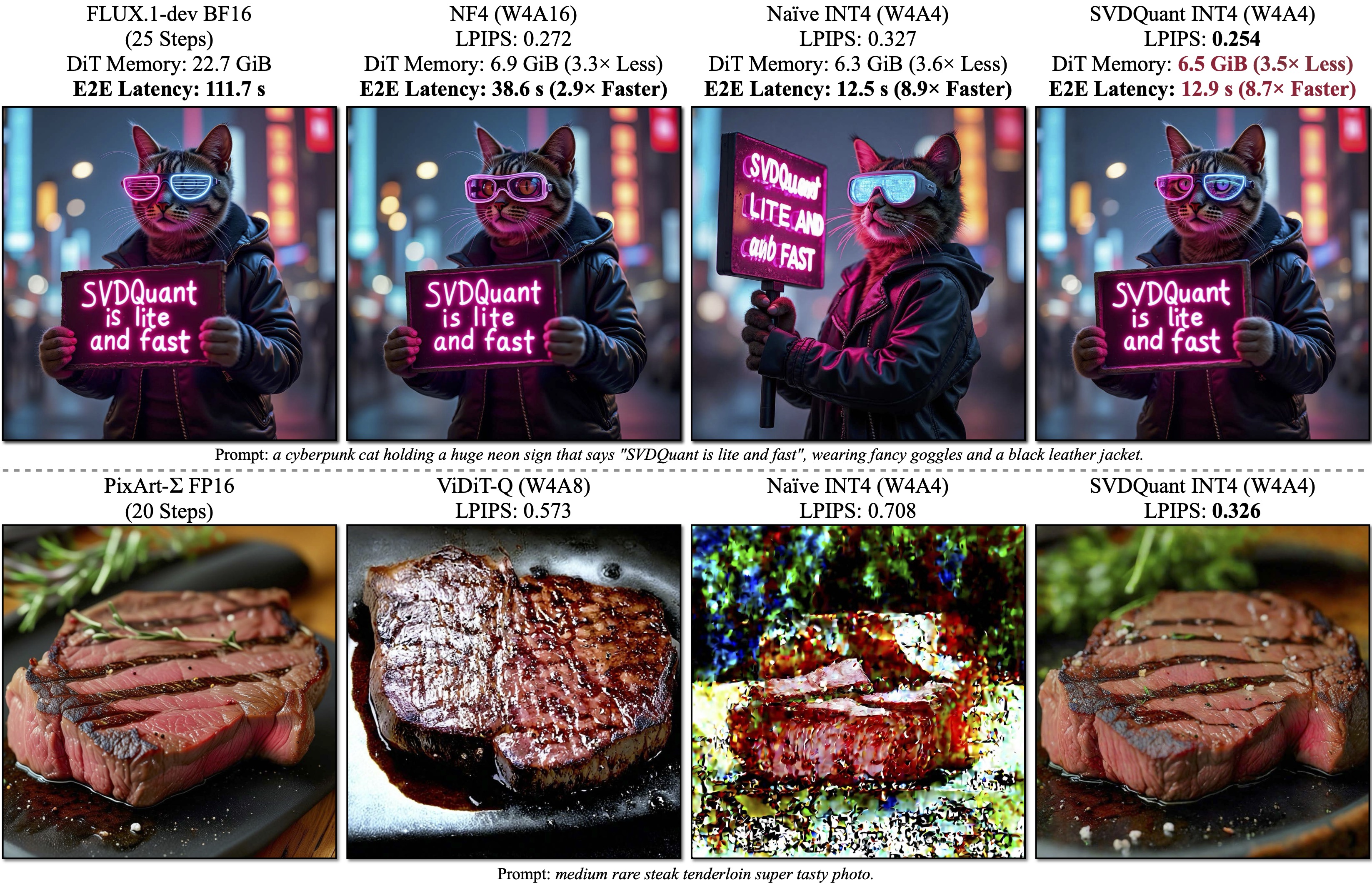

New files added in this commit (mode 0 → 100644 is a regular file; 0 → 100755 is an executable file):

- assets/teaser.jpg (0 → 100644, 1.68 MB)
- dev-scripts/dump_flux.py (0 → 100644)
- dev-scripts/eval_perf.sh (0 → 100755)
- dev-scripts/fakequant.py (0 → 100644)
- dev-scripts/qmodule.py (0 → 100644)
- dev-scripts/run_flux.py (0 → 100644)
- example.py (0 → 100644)
- nunchaku/__init__.py (0 → 100644)
- nunchaku/__version__.py (0 → 100644)
- nunchaku/csrc/flux.h (0 → 100644)
- nunchaku/csrc/gemm.h (0 → 100644)
- nunchaku/csrc/pybind.cpp (0 → 100644)
- nunchaku/models/__init__.py (0 → 100644)
- nunchaku/models/flux.py (0 → 100644)