Merge branch 'main' of github.com:mit-han-lab/nunchaku into dev

README_ZH.md (new file, mode 0 → 100644)

docs/setup_windows.md (new file, mode 0 → 100644)

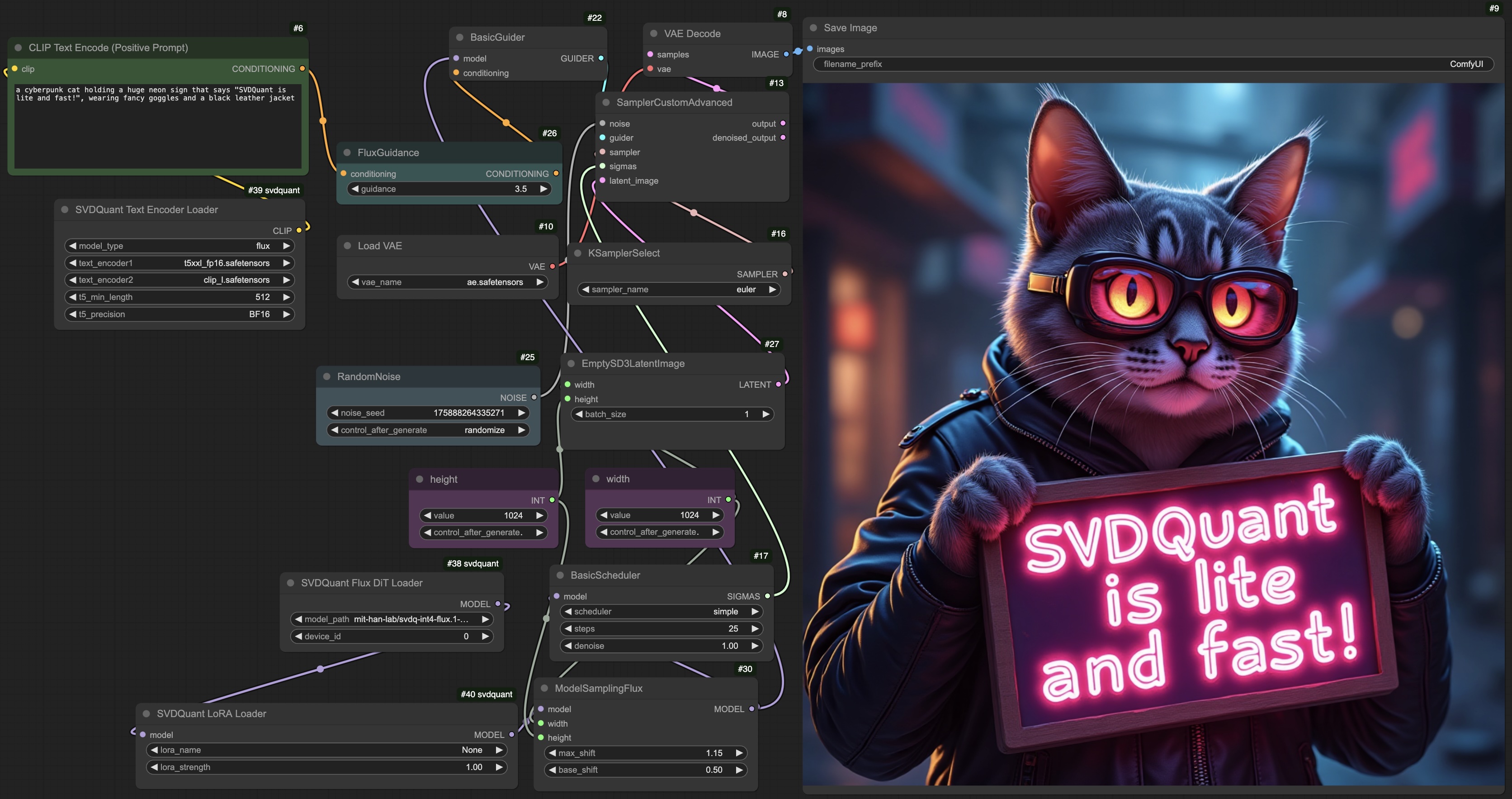

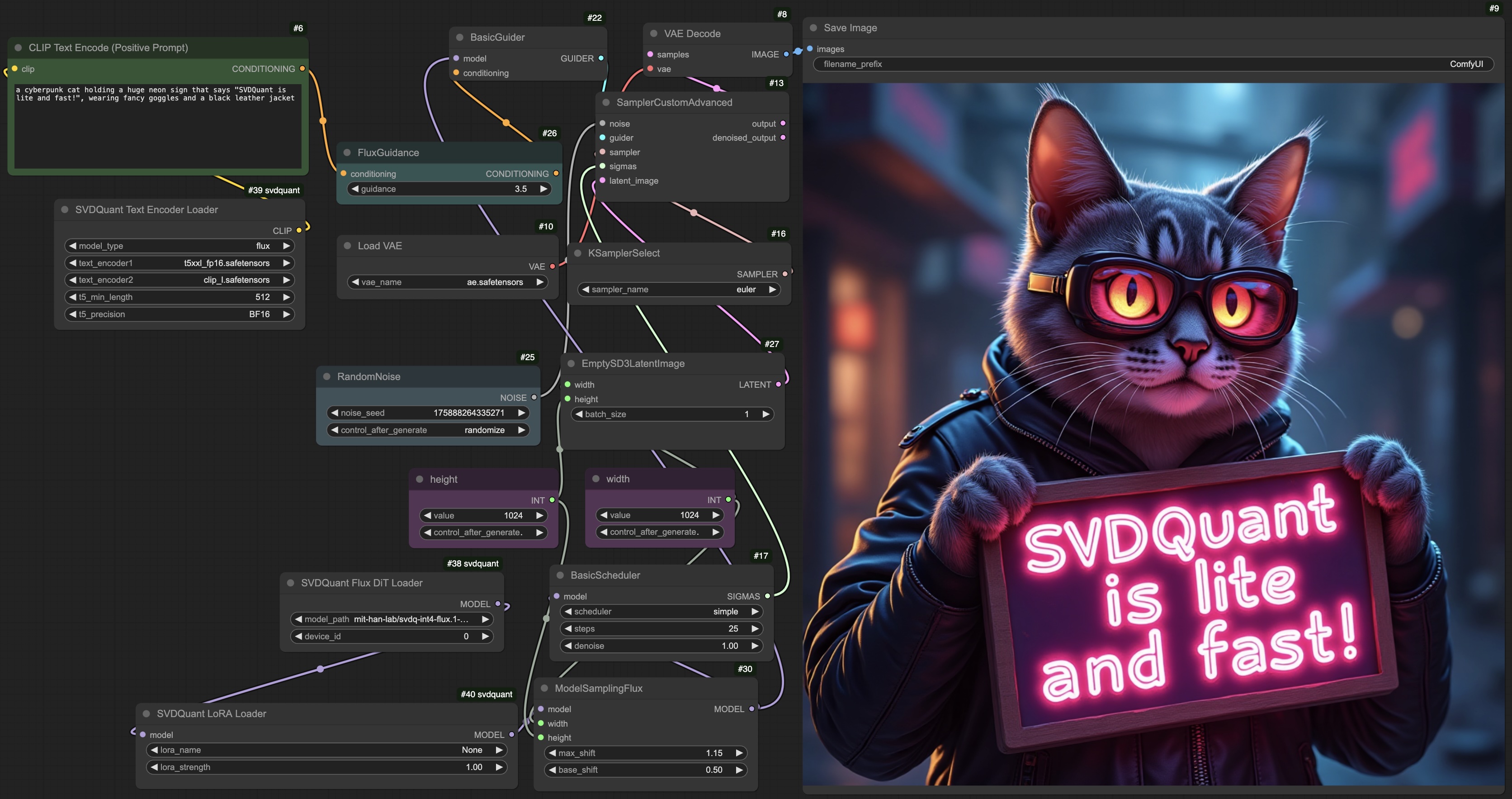

970 KB

968 KB

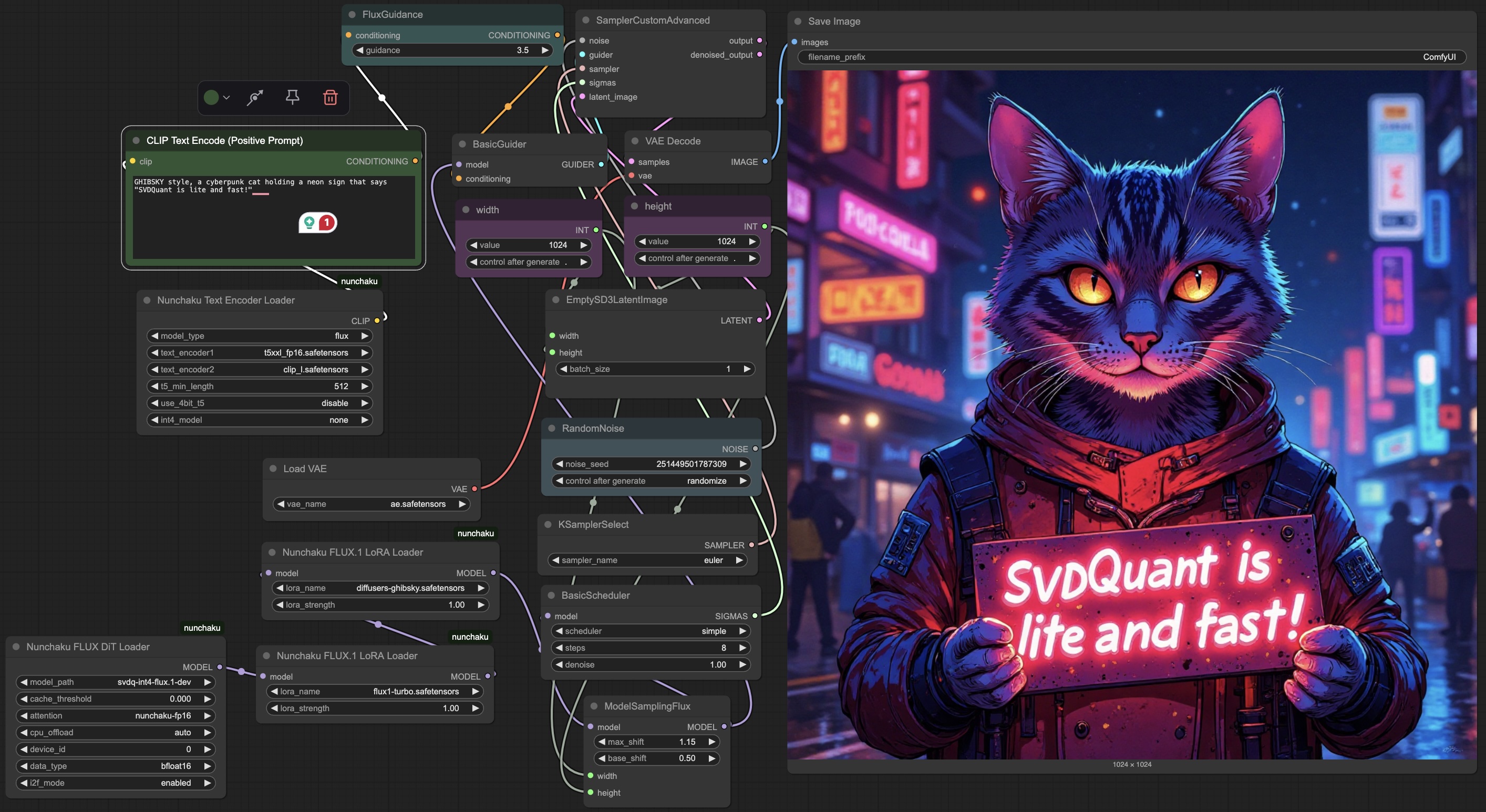

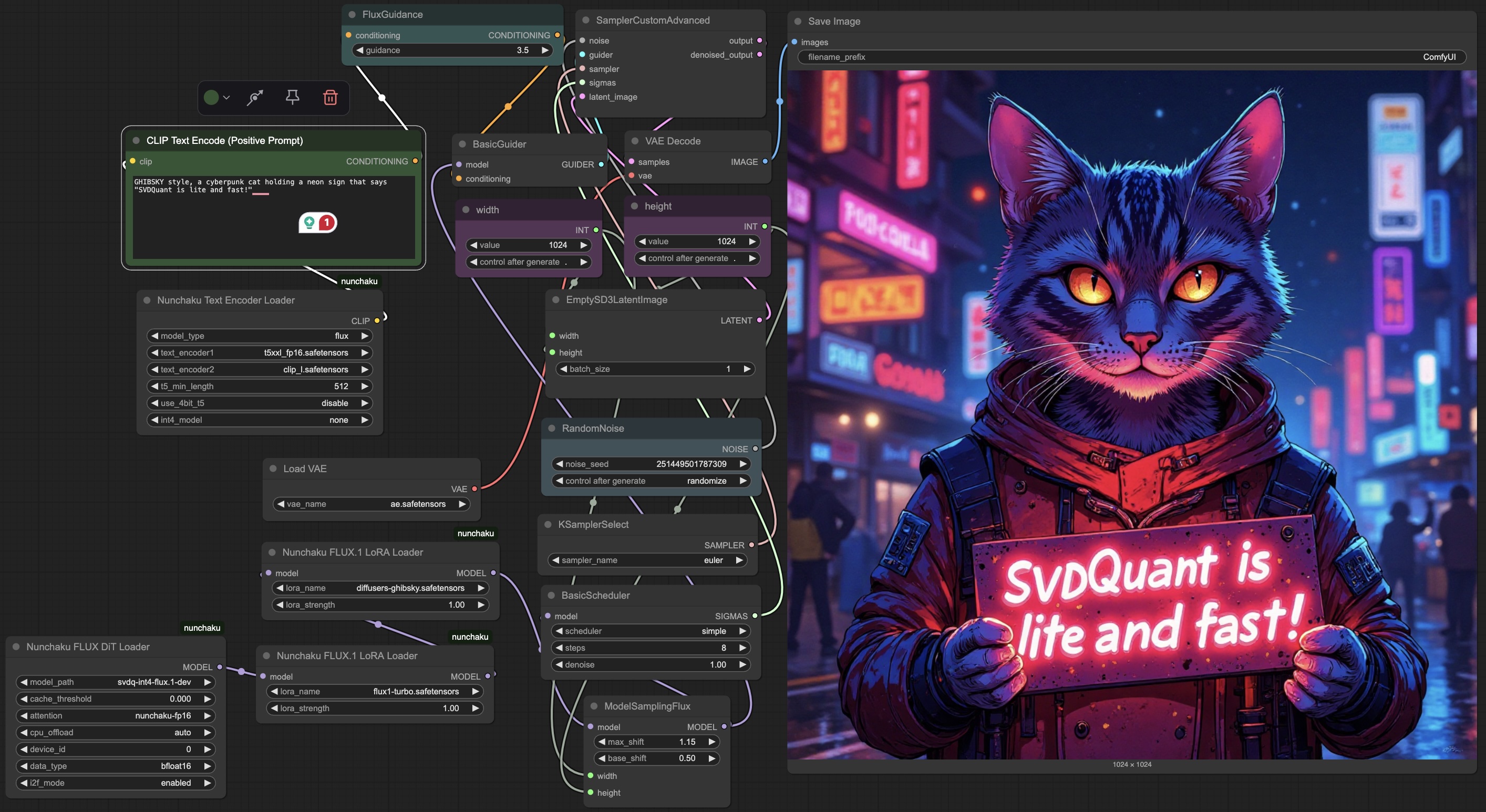

113 KB

275 KB

154 KB

157 KB