
v1.0

parents

Showing

90.2 KB

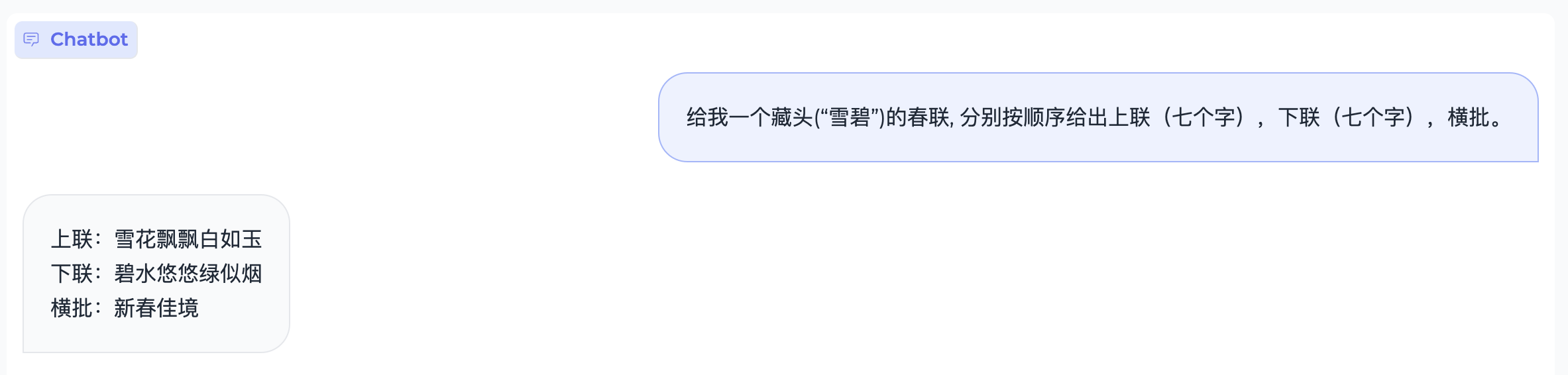

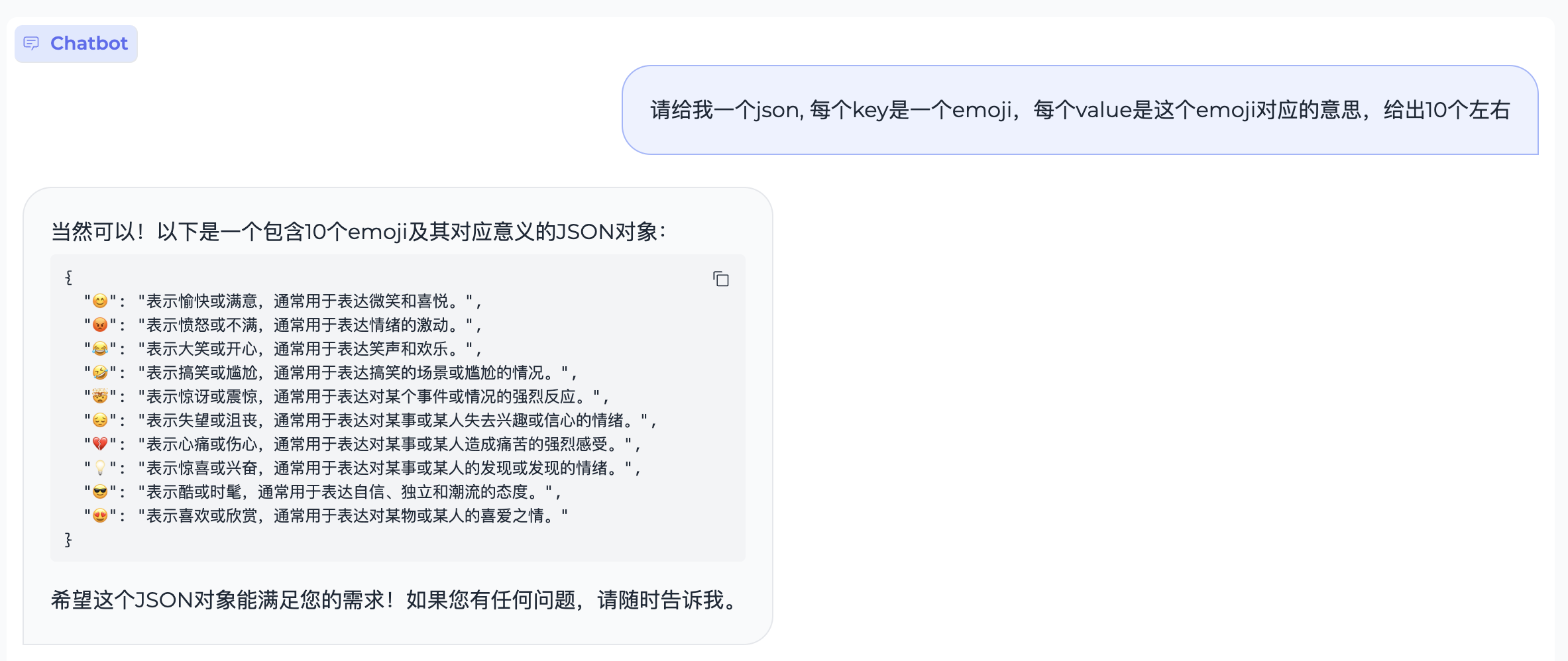

assets/knowledge.case1.png

0 → 100644

100 KB

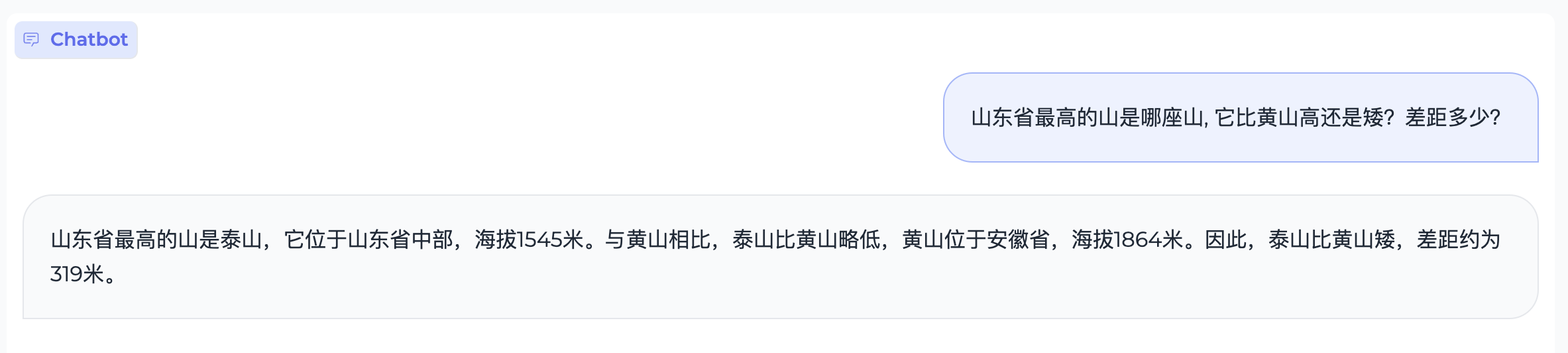

assets/math.case1.png

0 → 100644

67 KB

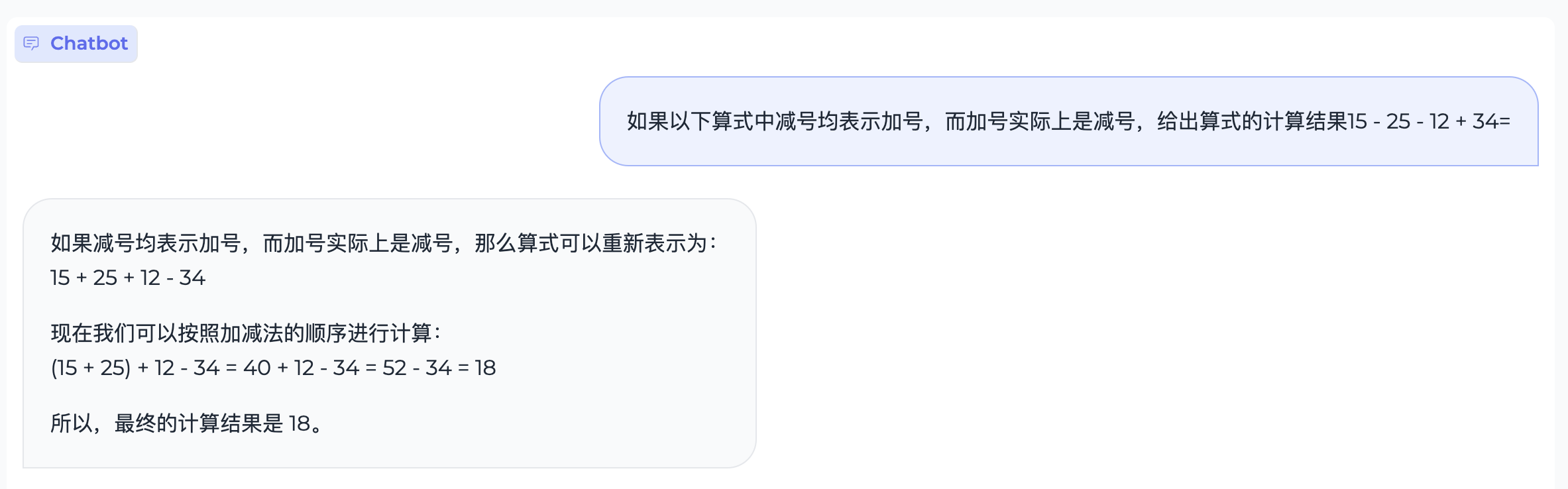

assets/math.case2.png

0 → 100644

136 KB

94.7 KB

303 KB

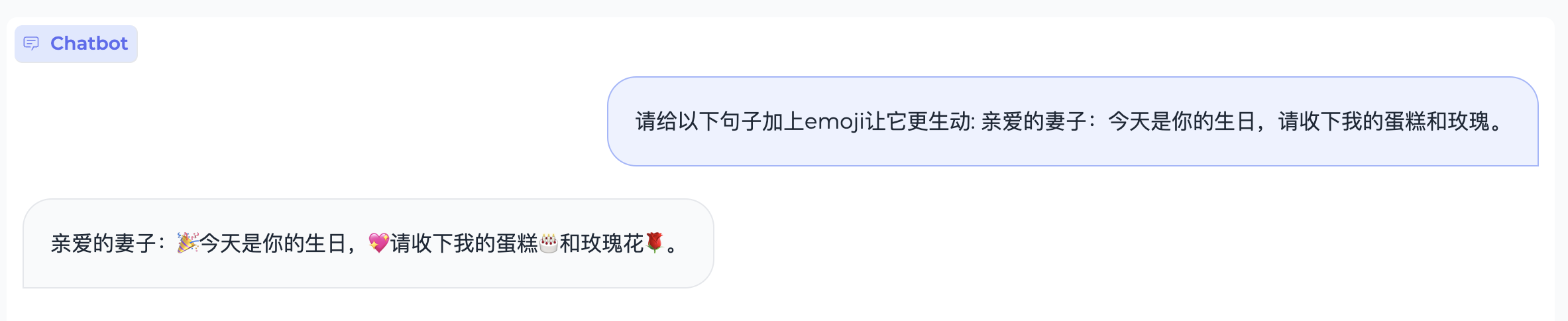

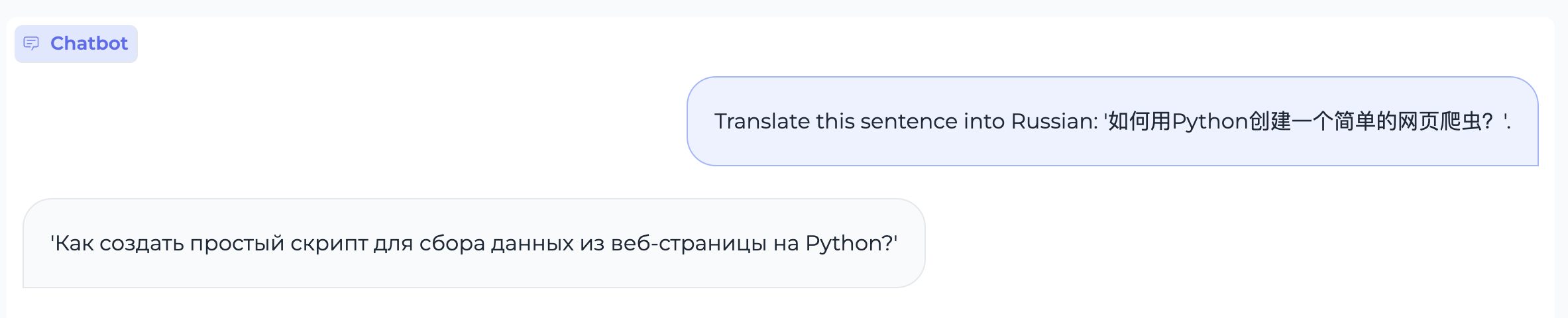

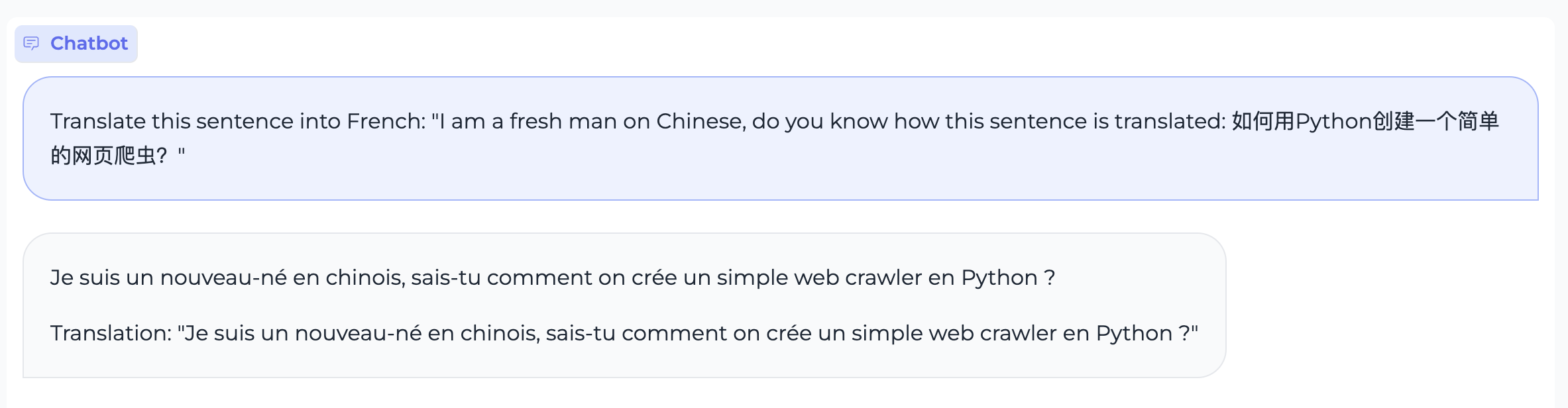

assets/translation.case1.png

0 → 100644

78 KB

assets/translation.case2.png

0 → 100644

119 KB

assets/wechat.jpg

0 → 100644

26.8 KB

convert_data.py

0 → 100644

demo/hf_based_demo.py

0 → 100644

demo/vllm_based_demo.py

0 → 100644

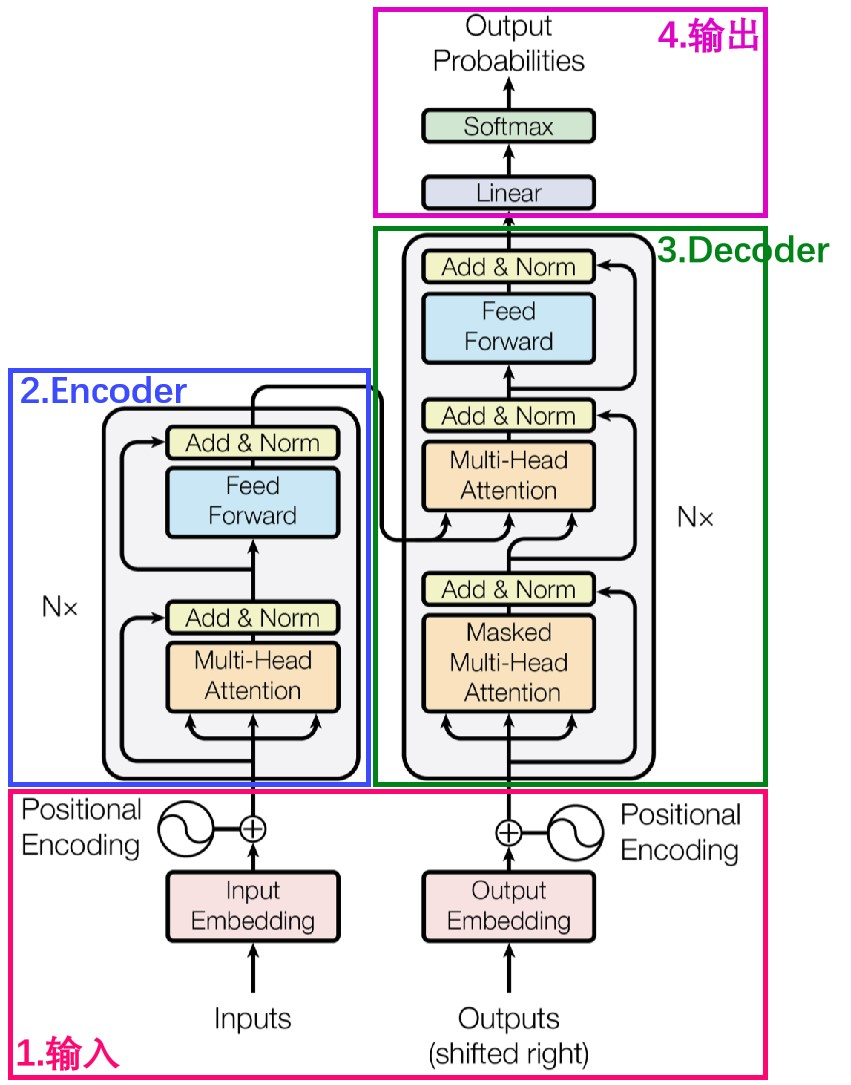

doc/transformer.png

0 → 100644

366 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

finetune/README.md

0 → 100644

finetune/README_en.md

0 → 100644